Re: Bot or Not? – Are you suffering from ‘bot clicks’?


If you are a marketer, you have probably heard about a relatively new and emerging enemy: email security bots. The war is on, and for a while we stood by, clueless, watching from the side while the bots messed with our numbers, with no real solution available.

Well, it’s time to fight back!

It all started a couple of years ago, when some of our customers noticed a surge in email click metrics and pointed out some interesting and strange behaviors in their data:

- Seconds after an email was delivered, they saw a high volume of clicks

- They noticed a high volume of clicks from the same account/company

- The activity log showed ‘clicked a link in an email’ before the ‘email open’ event, and in many cases without a visit to the actual page
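The three symptoms above can be combined into a rough per-click heuristic. The Python below is a minimal sketch, not eDigital.Marketing's actual method: it assumes click activity has already been exported into simple records, and the `Click` fields, the 10-second "fast click" threshold, and the 5-clicks-per-company threshold are all hypothetical values to tune against your own data.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Click:
    """One 'Clicked Link in Email' record joined with related activity (hypothetical export format)."""
    lead_id: int
    company: str
    delivered_at: datetime
    clicked_at: datetime
    opened_at: Optional[datetime]  # None if no open was logged
    visited_page: bool             # True if a page visit followed the click


# Hypothetical thresholds; tune them against your own data.
FAST_CLICK = timedelta(seconds=10)  # "seconds after the email is delivered"
COMPANY_BURST = 5                   # clicks from one company on the same email


def bot_signals(click: Click, clicks_per_company: Counter) -> list:
    """Return the names of the symptoms this click shows."""
    signals = []
    if click.clicked_at - click.delivered_at <= FAST_CLICK:
        signals.append("fast_click")
    if clicks_per_company[click.company] >= COMPANY_BURST:
        signals.append("company_burst")
    if click.opened_at is None or click.opened_at > click.clicked_at:
        signals.append("click_before_open")
    if not click.visited_page:
        signals.append("no_page_visit")
    return signals


def classify(clicks: list) -> dict:
    """Map each lead_id to the list of symptoms its click shows."""
    per_company = Counter(c.company for c in clicks)
    return {c.lead_id: bot_signals(c, per_company) for c in clicks}


def is_probable_bot(signals: list) -> bool:
    # Two or more symptoms = "probably a bot"; a single symptom is not conclusive.
    return len(signals) >= 2
```

Requiring two symptoms rather than one is a deliberate choice: a missing open on its own is common for real people whose email client blocks images, so it should not be enough to flag a click.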

Our research concluded that these behaviors are typical of bots, and NOT of humans. These bots click every link in emails sent to the domain they protect, so that links leading to phishing scams can be flagged before an employee clicks them and harms the company.

The implications of these bot clicks can be devastating for marketing teams worldwide.

All your marketing numbers could be way off: you may have been counting clicks completely wrong in your marketing automation platform, not to mention the impact on your scoring, interesting moments, nurture campaigns and, obviously, your reports.

We at eDigital.Marketing did extensive research and came up with a solution that we would like to share with you. We have implemented it in our customers' instances, and our customers were surprised by the findings and are satisfied with the results.

We started by running a test on an email that was part of a nurturing campaign already built in Marketo.

The test used a Smart Campaign that listened for page visits and email deliveries.

The program ran for about five days to give prospects enough time to actually click the link in the email.

Five days later, we ran a new Smart Campaign with no filters at all, just to collect Marketo's "Clicked Link in Email" data.

We downloaded both lists to Excel and checked for clicks recorded by Marketo that were NOT in the list we had created. Here are the shocking numbers:

Marketo counted 327 clicks, WHILE the Smart Campaign identified only 91 of them as real people who clicked the link and actually visited the page.

At that point, it was simple to calculate that approximately 72% of those clicks ((327 - 91) / 327 = 236 / 327, about 72%) were fake and made by bots. We then identified the companies that use bots as part of their IT security infrastructure and created a list of them.
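The Excel comparison boils down to set operations on lead IDs. A minimal sketch, assuming each export has been reduced to a set of lead IDs; `split_clicks` and the sample IDs are hypothetical. Taking the intersection means a lead counts as human only if it appears in both lists, so anyone the Smart Campaign flagged for an old page visit, without an email click, drops out automatically.

```python
def split_clicks(marketo_clicks: set, campaign_visitors: set):
    """Split Marketo's click list into probable humans and probable bots.

    marketo_clicks:    lead IDs with a 'Clicked Link in Email' activity (unfiltered export)
    campaign_visitors: lead IDs the Smart Campaign flagged (email delivered + page visited)
    """
    humans = marketo_clicks & campaign_visitors  # clicked AND visited the page
    bots = marketo_clicks - campaign_visitors    # clicked but never visited
    bot_share = len(bots) / len(marketo_clicks) if marketo_clicks else 0.0
    return humans, bots, bot_share


# Made-up IDs shaped like the test above: 327 clicks, 91 of which also visited the page,
# plus a few campaign members who visited earlier but never clicked this email.
marketo_clicks = set(range(327))
campaign_visitors = set(range(91)) | {1001, 1002, 1003}
humans, bots, bot_share = split_clicks(marketo_clicks, campaign_visitors)
print(len(humans), len(bots), f"{bot_share:.0%}")  # 91 236 72%
```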

To make things even more complicated, we did some additional research on the list and found that a third of the remaining ‘humans’ could NOT be counted as clicks anyway, because they had visited the web page in the past and NOT via the email we were testing. The Smart Campaign flagged them because the email was delivered to them and they had indeed visited the page in the past.

BUT because we compared the Marketo clicks against the Smart Campaign list, those ‘humans’ (who visited the page but didn't click) were already excluded, so no extra calculation was needed.

To make sure the data was correct, we sampled some ‘bot’ leads and checked their activity logs in Marketo. All leads from every company suspected of using bots showed the activity of a bot: clicked, but neither opened the email nor visited the page.

In conclusion, out of 327 clicks identified by Marketo, only 91 were humans.

Now all that was left for us to do was add a few Smart Campaigns to neutralize the bots and stop them from disrupting the scoring system, interesting moments, and all reporting.

We now have a bot-filtering system running in the background, making sure all our numbers are correct and not just making us look good.

- Labels: Email Marketing, Marketing, Solutions


Just replying to Dan's post.

But now that we're faced with a third variable, and since Marketo doesn't support conditional "choice" logic (we would need a second-level choice of "clicked link on specific web page"), we're kinda stuck. Heck, there's not even a choice that allows you to select the link constrained by a web page. I believe Sanford has been toying with a custom activity that would identify when all three of these actions have occurred, but nothing has been published yet.

1. Checking the network traffic in Chrome, I can see that the custom Munchkin event does fire, and the made-up page also pre-populates in the Visited Web Page dropdowns in Marketo.

2. This is just a suggestion, where the goal was to track (1) email click -> (2) visited web page -> (3) clicked link on web page, all in one session starting with the email click.

3. Again, this would let you track (1), (2), and (3) above without worrying about historical click activity. Plus, I suppose it'd make things easier, because you can just listen for a single Visit Web Page activity.
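The goal in points 1-3 (email click, then page visit, then a link click on that page, all in one session) can be expressed as an ordered-sequence check over a lead's activity log. A minimal sketch, assuming activities are exported as `(timestamp, activity_type)` tuples sorted by time; the activity-type strings and the 30-minute session window are hypothetical. It only helps if each of the three steps is reliably logged in the first place.

```python
from datetime import datetime, timedelta

SESSION_WINDOW = timedelta(minutes=30)                    # hypothetical session length
STEPS = ["email_click", "page_visit", "page_link_click"]  # hypothetical activity names


def completed_sequence(activities, window=SESSION_WINDOW):
    """True if email_click -> page_visit -> page_link_click occur in that order
    within `window` of the email click.

    activities: list of (timestamp, activity_type) tuples sorted by timestamp.
    Page visits that happened *before* the email click are never counted,
    so historical visits cannot satisfy the sequence.
    """
    for i, (start, kind) in enumerate(activities):
        if kind != STEPS[0]:
            continue  # each email click starts a candidate session
        step = 1
        for ts, k in activities[i + 1:]:
            if ts - start > window:
                break  # session window exceeded
            if k == STEPS[step]:
                step += 1
                if step == len(STEPS):
                    return True
    return False
```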


1. Checking the network traffic in Chrome, I can see that the custom Munchkin event does fire, and the made-up page also pre-populates in the Visited Web Page dropdowns in Marketo.

You haven't tested this sufficiently. By definition, an async XHR cannot be guaranteed to finish before the page unloads. Sometimes an XHR will complete, sometimes not; network conditions, the TTFB of the next page, and browser heuristics are all involved. That uncertainty is not up for debate: it's been understood for about 15 years now, and it's the reason the alternative Beacon API exists (in some browsers, unfortunately not all). Munchkin doesn't use beacons unless you use my adapter, and even then it will never work in Safari and IE.

2. This is just a suggestion, where the goal was to track (1) email click -> (2) visited web page -> (3) clicked link on web page, all in one session starting with the email click.

If you change the goal to "There must be a deliberate human interaction after the pageview," that's a new goal, and you don't need to do a fake Visit Web Page for that. But we can't keep moving the goalposts of what a Click Email represents, or used to represent!

3. Again, this would let you track (1), (2), and (3) above without worrying about historical click activity. Plus, I suppose it'd make things easier, because you can just listen for a single Visit Web Page activity.

You can already listen for a single Click Link activity constrained by the web page, which will always be logged for the same click event you're adding a second event listener for. I'm not understanding what else you get from a fake Visit Web Page, even if it were made reliable.


It's not a perfect solution, but one way to avoid triggering a 'fake' page view might be to use the Forms 2.0 API: embed a hidden form in the page and check for the form submission in the campaign instead of a page view (include the page URL as a hidden field in the form so you can easily reuse it). Then, depending on where the page is hosted (on your own server or on a Marketo landing page), you can also add a layer of checks to make sure it's a real browser before allowing the form script to run, e.g. check the User-Agent header, or add a delay of a second, assuming a bot's session is very short. Of course, if a bot really wants to mimic a human, then it's going to be pretty hard to detect, but that should help weed out the most obvious ones.

Note: if the page is publicly accessible as well as linked from your email, you'll also likely want to add some conditions around loading the form script so that it only runs for known users. Otherwise, you'll end up with a whole lot of anonymous visitors becoming identified users that count towards your allocation (because as soon as a form is submitted, the visitor counts as an identified user, even if name and email are blank).


of course, if a bot really wants to mimic a human, then it's going to be pretty hard to detect

Right, and the inability to distinguish between the two is key to scanner technology working at all. You can't distinguish based on User-Agent because scanners purposely use real, headless browsers. If you could check based on the User-Agent, then so could the person running a malicious site, and the scanner would be rendered worthless.

If you require a form submission before registering the initial hit, then it's even easier than what you've described! The problem is that marketers want to know if someone clicked, even if they don't convert via a form. That's the hard part.


Hey Dan Stevens,

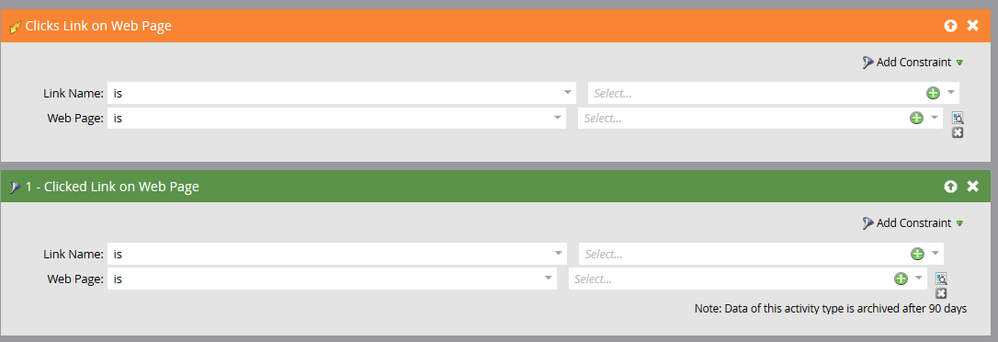

Re: "Heck, there's not even a choice that allows you to select the link constrained by a web page": I'm sure you know that there IS a Web Page constraint on the Clicks Link on Web Page trigger and the Clicked Link on Web Page filter (see the screenshot above), so I'm wondering what you meant by that.

The limitations in the flow step choice logic have often frustrated me too. Have you considered using "Member of Smart List" as the Choice in Change Program Flow step and putting your multiple criteria in the Smart List?

Denise


Good idea! I just voted for it.


Ahh, yeah, this makes sense. Agreed on the previous-visitors issue. Conditional choice logic would be amazing. The trouble I face is that I really want to use the "Clicked Link" trigger, but then wait for a period of time after that activity for the second- or third-layer activity you're describing here (page view, page click, form fill, etc.). I can watch page activity and use query string filters to identify users who visit pages via email clicks, but that activity isn't associated with the email program, and the trigger is now "Visited Webpage," which doesn't help our sales team understand what content or marketing campaign is driving engagement.
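The query-string approach mentioned above can be taken one step further outside Marketo: parse each visited URL, keep only email click-throughs, and pull out a campaign tag so the visits can be rolled up per program in a report. A minimal sketch, assuming your email links are tagged with `utm_medium=email` and `utm_campaign=<program name>`; that tagging convention is an assumption about your own links, not something Marketo adds for you, and the helper names are hypothetical.

```python
from collections import Counter
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def email_visit_program(url: str) -> Optional[str]:
    """Return the campaign tag if the URL looks like an email click-through, else None.

    Assumes (hypothetically) email links carry utm_medium=email and
    utm_campaign=<program name>; adjust to your own tagging convention.
    """
    params = parse_qs(urlsplit(url).query)  # dict of parameter -> list of values
    if params.get("utm_medium", [""])[0].lower() != "email":
        return None
    return params.get("utm_campaign", [None])[0]


def visits_per_program(urls) -> Counter:
    """Count email-driven page visits per campaign tag, skipping untagged visits."""
    return Counter(p for p in map(email_visit_program, urls) if p)
```

Bear in mind that page visits can also come from scanners, so this attributes visits to programs but does not by itself separate bots from humans.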


bots can trigger web page visits as well.

Ab-so-lutely!


Agree.

I'd love to see a clearer set of images or diagrams showing how you did this, Ronen.


Yes! I appreciate learning about what you discovered, but I feel like you yada yada yada'd past the good part!

Marketo Champion & Adobe Community Advisor


We've definitely seen the same issue. In our industry it's fairly isolated, and we see bot clicks in a pretty specific region, but our numbers are even further off than the 72% you're seeing: we're talking 93-96% of clicks being inaccurate.

We've since started using the page view as the trigger for those groups as well, which has helped, but the biggest issue for me is associating those clicks (web views) back to the program level in an automated way, since the Clicked Link in Email activity has a program ID associated with it, but the Visit Web Page activity does not.

It's a serious issue that Marketo itself needs to address in the platform. We've been trying to get cleaner data with our own workarounds, but they really only work on a program-by-program basis, and we haven't been able to come up with an instance-wide solution that we could set up to run all the time.

- Copyright © 2025 Adobe. All rights reserved.
