Re: Random Sample + Other Choices

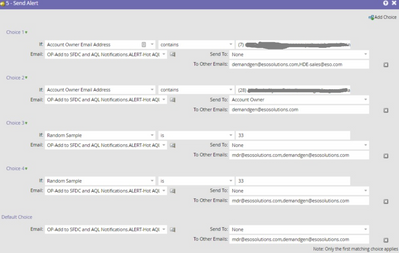

Hello, I want to route alerts to my sales team when an account has an assigned owner, and otherwise round robin them to our MDR team. I wrote the following logic, but it is sending alerts to the MDRs twice. Is it something to do with the combination of specific choices + Random Sample? Or are there other ideas? User error is the most likely answer, but I can't spot my error.

What's stranger, the next step in the flow isn't occurring. Weird, right?

I would always split this flow step up:

- First step to send the alert to people where the assigned owner is known (with a Do nothing default)

- Process these people for any other flow step that comes after the alert (or do those flow steps first, before the alert)

- Then remove the ones already alerted from the flow and do the round robin alert.

Alternatively, you can use a Request Campaign step with two options (if the account owner is known, request campaign A; if unknown, request campaign B with the round robin).

Which would be the better option depends on the rest of your flow.

The reason? The round robin will calculate the 33% based on the entire group going into your flow step, not the audience left after choices 1 and 2.

I can't really explain the double alerts based on what I see, but the rest of your smart campaign might explain that.

Hi @Katja_Keesom, let me see if I understand. Let's say I have 9 people, 6 assigned to someone listed in choices 1 and 2. My questions are

- Are you saying all 9 people will run through the random sample choice(s)? That contradicts how I thought choices worked where once a person meets the criteria for one choice, that step happens and then it moves to the next flow step.

- Do I need 1 random sample step (plus default) or 2 random sample steps (plus default) to round robin through 3 people?

Not all 9 people will go through the round robin choices in the flow step as you set it up. What will happen is:

- People meeting your choice 1 requirement will go through choice 1.

- Of the remaining people, the ones meeting your choice 2 will go through that.

- Of the remaining people, 33% of 9 (so three people) will go through choice 3, your first round robin.

- No people are left for choice 4.

So the round robin will calculate your percentage based on the total of people going into the flow step in the first place.
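As a rough illustration, the behaviour described in this reply can be modelled as below. This is only a sketch of the claim as stated here, not documented or confirmed Marketo behaviour; the function name `split_by_total` and the rounding to the nearest whole person are assumptions.

```python
def split_by_total(total, matched_earlier, sample_percents):
    """Model the claim: each Random Sample quota is a percentage of everyone
    entering the flow step, but can only be filled from people still left."""
    remaining = total - matched_earlier
    counts = []
    for pct in sample_percents:
        quota = round(total * pct / 100)   # quota based on the full audience (assumed rounding)
        taken = min(quota, remaining)      # cannot take more people than are left
        counts.append(taken)
        remaining -= taken
    return counts, remaining               # whoever remains falls to the Default choice


# 9 people enter, 6 match choices 1 and 2, then two 33% Random Sample choices
counts, default = split_by_total(9, 6, [33, 33])
print(counts, default)  # [3, 0] 0
```

Under this model the first Random Sample choice absorbs all three remaining people, which is the outcome described in the list above.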

With a round robin I would define all minus one of your groups and let the remainder go through your Default choice. So in your case I would set up two round robin choices and let the remainder go through the Default step. That is to cover for rounding issues and to make sure everyone actually does get assigned.
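A minimal sketch of why the last group is best left to the Default choice, assuming each Random Sample choice takes a whole-number share of the audience. The rounding-down rule and the helper name `assign_with_default` are assumptions for illustration; the thread does not document how Marketo rounds.

```python
def assign_with_default(n_people, percents):
    """Give each Random Sample choice floor(n * pct / 100) people and
    return the leftover count that would fall through to the Default choice."""
    counts = [n_people * pct // 100 for pct in percents]
    default = n_people - sum(counts)
    return counts, default


# Three explicit 33% choices: 1 person in 10 falls through to the Default,
# and gets nothing if the Default is "Do nothing".
print(assign_with_default(10, [33, 33, 33]))  # ([3, 3, 3], 1)

# Two 33% choices plus a real Default action: the Default absorbs the remainder.
print(assign_with_default(10, [33, 33]))      # ([3, 3], 4)
```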

> People meeting your choice 1 requirement will go through choice 1.
>
> Of the remaining people, the ones meeting your choice 2 will go through that.
>
> Of the remaining people 33% of 9 - so three people will go through choice 3, your first round robin.

Don't think this is so, Katja. Random Sample is indeed weird, but it's not weird in this particular way.

If there are N remaining people before entering the first Random Sample, the final distribution will be N/3 - N/3 - N/3.

It isn't round-robin, though — it's not guaranteed to rotate to a new Random Sample bucket each time a new person qualifies. It could be A-A-B-C-B-C-A-B-C, but it eventually ends up 3A-3B-3C in any case.
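One way to picture "evenly distributed, but not in strict rotation" is a shuffled, balanced list of bucket labels. This is purely illustrative: the underlying algorithm is not documented, and `balanced_random_assignment` is a hypothetical helper, not anything Marketo exposes.

```python
import random
from collections import Counter


def balanced_random_assignment(n_people, buckets, seed=None):
    """Return one bucket label per person: counts are as even as possible,
    but the order is shuffled rather than a strict A-B-C rotation."""
    rng = random.Random(seed)
    labels = [buckets[i % len(buckets)] for i in range(n_people)]
    rng.shuffle(labels)
    return labels


assignment = balanced_random_assignment(9, ["A", "B", "C"], seed=1)
print("-".join(assignment))   # some non-rotating order of the nine labels
print(Counter(assignment))    # each of A, B, C appears 3 times
```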

I actually had a practical case this week where I could validate this. The round robin keeps taking the original volume going into the flow step for each consecutive choice. I'm willing to validate it again the next time I run into this situation, but as my experience was fresh, I was confident this is how it works.

I verified it right before I posted.

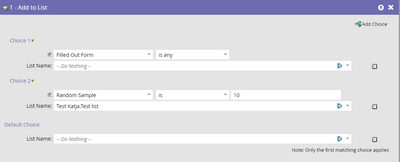

I verified just now. Ran this flow step on an audience of 893, out of which 337 people filled out a form. The list still ended up with exactly 89 people added. If the Filled out form had been deducted, it should have been 55 or 56.
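The arithmetic behind those two figures, assuming the Random Sample choice in the screenshot was set to 10% (the percentage is inferred from the numbers quoted, not stated in the post):

```python
audience = 893          # people entering the flow step
filled_out_form = 337   # people caught by the earlier choice
sample_pct = 10         # assumed Random Sample percentage

of_total = audience * sample_pct / 100
of_remaining = (audience - filled_out_form) * sample_pct / 100

print(of_total)      # 89.3, consistent with the 89 people observed
print(of_remaining)  # 55.6, hence "55 or 56" if the form fills had been deducted
```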

I suspect there's something amiss with your test.

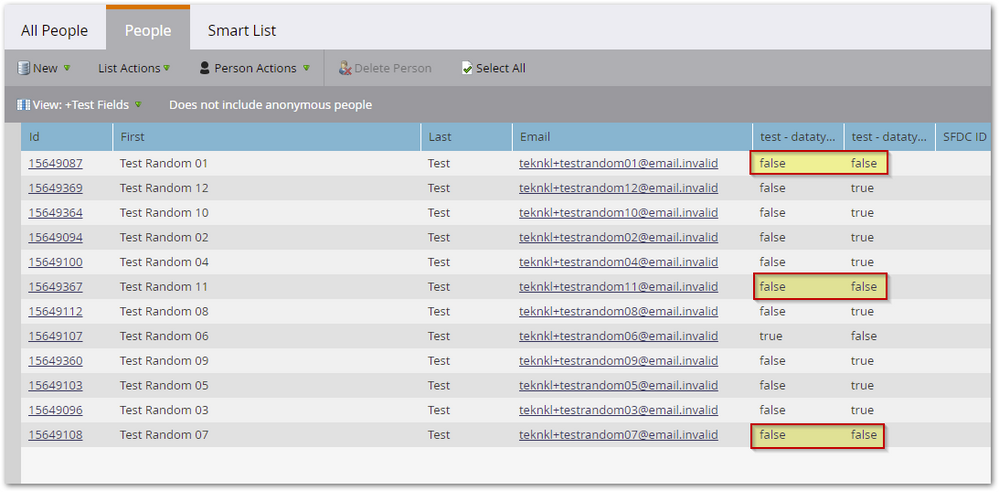

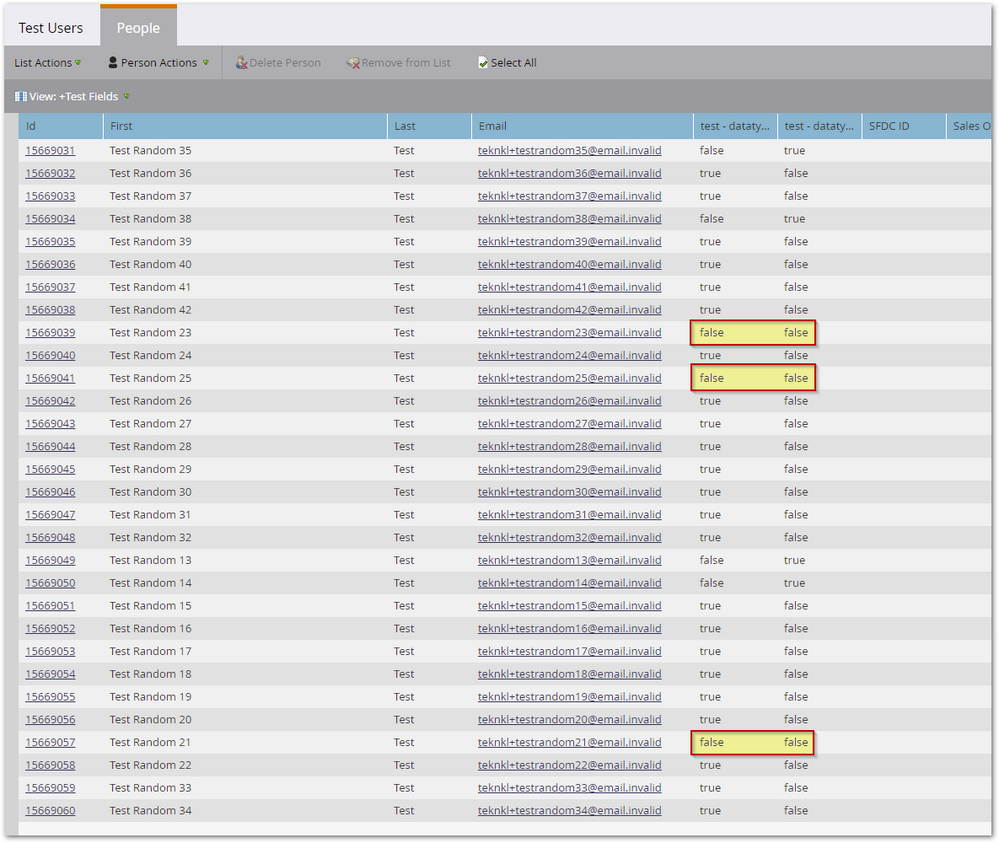

Consider this cohort of 12 test leads:

Only the 3 highlighted leads have two Boolean fields both set to false. The other 9 leads have one or the other of those fields set to true.

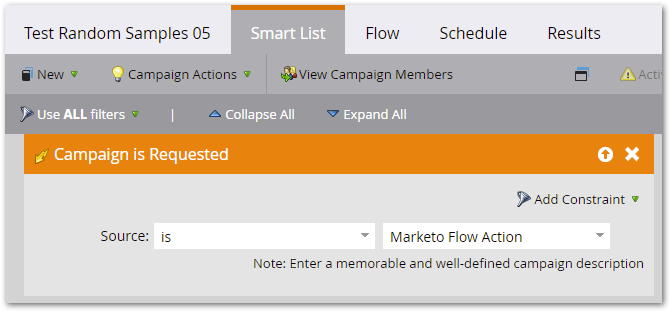

Now check out a test Smart Campaign. It's requestable:

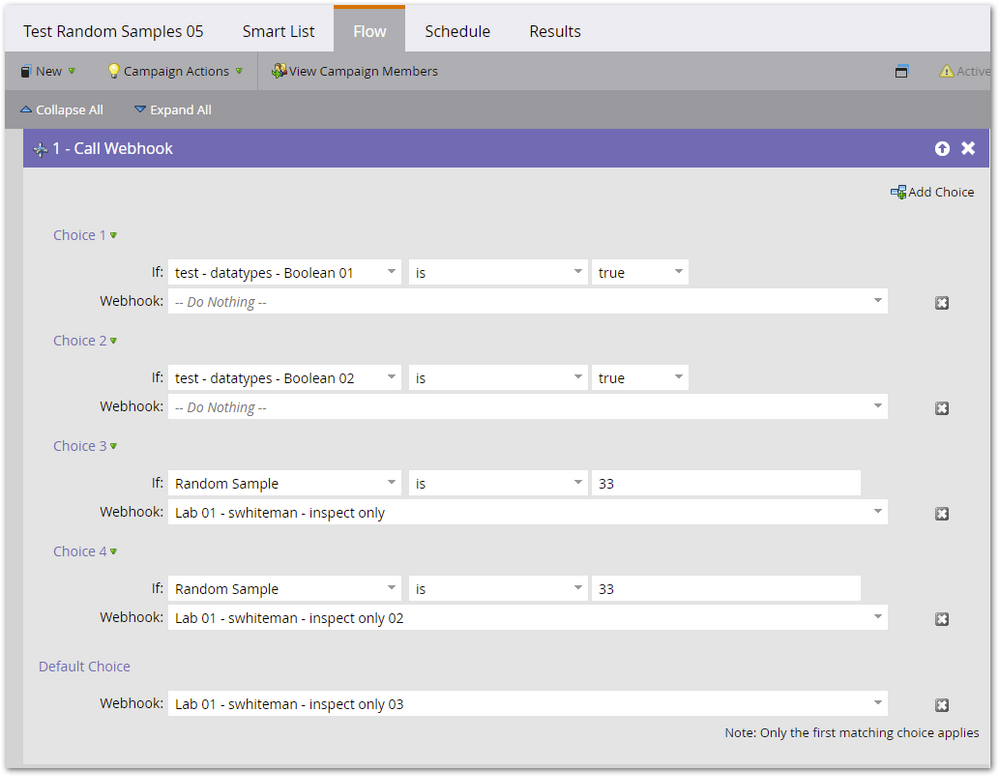

And has this Flow:

Choices 1 and 2 exempt anyone who has either of the 2 Booleans set to true. That'll be 9 people who don't make it past Choice 2.

Choices 3, 4, and 5 are intended to distribute the remaining 3 leads across the 3 different webhooks. (Naturally this could have been any Flow action with 3 different variations, I chose Call Webhook since webhooks are my thing! For security purposes this test webhook responds with an HTTP 405.)

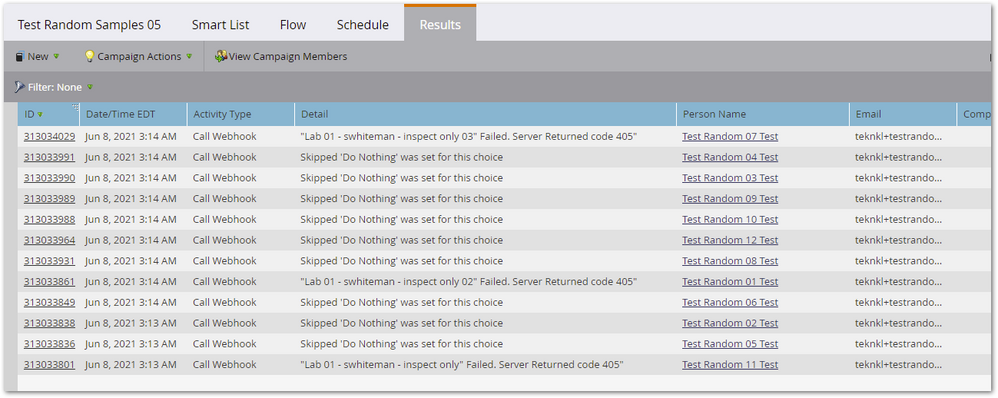

I selected the 12 leads and ran them through Request Campaign. Here's the Results tab:

As you can see, the 3 leads who made it past Choice 2 qualified for Choice 3, Choice 4, and the Default Choice, respectively.

Now let's run again with a larger cohort. Now it's 30 leads, 3 of whom should get past Choice 2.

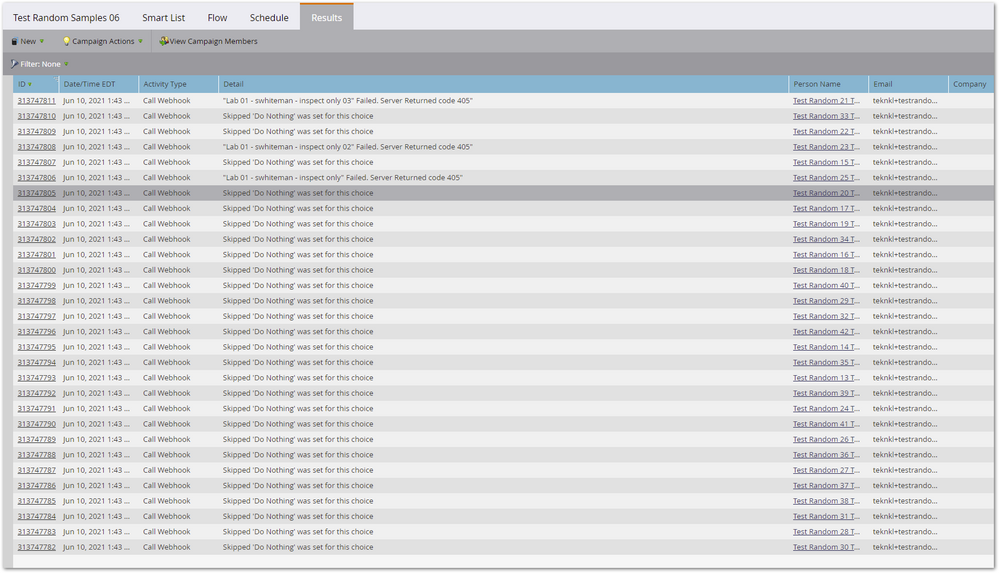

The result again is that those 3 are distributed evenly across Choice 3, Choice 4, and Default Choice:

Now, we don't actually know from these results what the underlying algorithm is doing; that's the problem with trying to reverse-engineer something that isn't completely documented. But we can tell what it's not doing, which is putting the remaining people all in Choice 3.
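To make the comparison concrete, here is a sketch of what the two competing models from this thread would predict for these test cohorts. It assumes two 33% Random Sample choices followed by the Default choice; both functions are hypothetical models written for illustration, not Marketo's actual algorithm.

```python
def predict_percent_of_total(total, remaining, pct=33, samples=2):
    """Model A: each sample quota is pct% of everyone entering the step."""
    counts = []
    for _ in range(samples):
        taken = min(round(total * pct / 100), remaining)
        counts.append(taken)
        remaining -= taken
    return counts + [remaining]          # last entry is the Default choice


def predict_percent_of_remaining(remaining, buckets=3):
    """Model B: the people left after earlier choices are split evenly."""
    base, extra = divmod(remaining, buckets)
    return [base + (1 if i < extra else 0) for i in range(buckets)]


for total in (12, 30):
    print(total, predict_percent_of_total(total, 3), predict_percent_of_remaining(3))
# 12 [3, 0, 0] [1, 1, 1]
# 30 [3, 0, 0] [1, 1, 1]
```

Only the second prediction matches the Results tab in both runs, where the three leads landed in Choice 3, Choice 4, and the Default Choice.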

Can't argue with that, but can't reconcile the two test results either. Weird.

- Copyright © 2025 Adobe. All rights reserved.
