Re: Bot or Not? – Are you suffering from ‘bot clicks’?

If you are a marketer, you have probably heard about a relatively new enemy: email security bots. The war is on, and for a while we stood by clueless, watching the bots mess with our numbers with no real solution available.

Well, it’s time to fight back!

It all started a couple of years ago, when some of our customers noticed a surge in email click metrics and pointed out some strange behaviors in their data:

- A high volume of clicks arrives within seconds of the email being delivered

- A high volume of clicks comes from the same account/company

- The activity log shows ‘clicked a link in an email’ before the ‘email open’ event, and in many cases without a visit to the actual page

Our research concluded that these behaviors are typical of bots, NOT of humans. A security bot's job is to follow every link in the emails sent to the domain it protects, so that harmful links can be flagged as phishing before they harm the company.
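For anyone who wants to check exported activity logs against these behaviors, here is a minimal Python sketch. The record shape (`"click"`, `"open"`, `"visit"` paired with a timestamp), the 60-second window, and the decision to require all three signals at once are assumptions made for illustration, not Marketo identifiers or the method used in the test described here.

```python
from datetime import datetime, timedelta


def looks_like_bot(activities, delivered_at, window_seconds=60):
    """Return True if one lead's log for one email matches the bot pattern.

    activities: list of (kind, timestamp) tuples, where kind is one of the
    hypothetical labels "click", "open" or "visit".
    """
    clicks = [t for kind, t in activities if kind == "click"]
    opens = [t for kind, t in activities if kind == "open"]
    visits = [t for kind, t in activities if kind == "visit"]
    if not clicks:
        return False
    first_click = min(clicks)
    # Signal 1: the click lands almost immediately after delivery.
    fast_click = first_click - delivered_at <= timedelta(seconds=window_seconds)
    # Signal 2: the click is logged before any open (or there is no open).
    click_before_open = not opens or first_click < min(opens)
    # Signal 3: nobody actually arrived on the page.
    no_visit = not visits
    return fast_click and click_before_open and no_visit


delivered = datetime(2019, 3, 1, 9, 0, 0)
bot_log = [("click", delivered + timedelta(seconds=4))]
human_log = [
    ("open", delivered + timedelta(minutes=42)),
    ("click", delivered + timedelta(minutes=43)),
    ("visit", delivered + timedelta(minutes=43, seconds=2)),
]
print(looks_like_bot(bot_log, delivered))    # True
print(looks_like_bot(human_log, delivered))  # False
```

Requiring all three signals keeps false positives low, at the cost of missing scanners that also fetch the page.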

The implications of these bot clicks can be devastating for marketing teams worldwide.

All your marketing numbers could be way off. It means you’ve been counting clicks completely wrong in your marketing automation program, not to mention the impact on your scoring, interesting moments, nurturing campaigns and, obviously, your reports.

We at eDigital.Marketing did extensive research and came up with a solution that we would like to share with you. We implemented it in our customers' instances, and they are surprised by the findings and satisfied with the results.

We started by running a test on an email that was part of a nurturing campaign already built in Marketo.

The test used a Smart Campaign that listened for page visits and email delivery.

The program ran for about five days to allow enough time for prospects to actually click on the link in the email.

Five days later we ran a new Smart Campaign just to collect data from Marketo about "clicked the link in the email" without any filters at all.

We downloaded both lists to Excel and checked for clicks in Marketo that were NOT in the list we had created. Here are the shocking numbers:

Marketo counted 327 clicks WHILE the Smart Campaign only identified 91 of those clicks as real people who clicked the link and actually visited the page.

So at that point it was simple to calculate that approximately 72% of those clicks (236 of 327) were fake and were made by ‘bots’. We then identified and listed the companies that use bots as part of their IT security infrastructure.

To make things even more complicated, we did some additional research on the list and found that a third of the remaining ‘humans’ can NOT be counted as clicked anyway, since they visited the webpage in the past and NOT via the email we tested. The Smart Campaign flagged them because the email was delivered to them and they had indeed visited the page in the past.

BUT since we compared the Marketo clicks to the Smart Campaign clicks, those ‘humans’ (who didn't click but visited the page) were excluded, and therefore no extra calculation was needed.

To make sure the data was correct, we sampled some ‘bot’ leads and checked their logs in Marketo. All leads from all companies suspected of using ‘bots’ showed the activity of a ‘bot’: clicked, but no open and no page visit.

In conclusion, out of 327 clicks identified by Marketo, only 91 were Humans.
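The list comparison and the 72% figure can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the lead IDs below are placeholders standing in for the two exported lists, and the function name is made up for this example.

```python
def bot_click_share(marketo_clickers, verified_clickers):
    """Compare two exported lists of lead IDs.

    marketo_clickers: everyone Marketo counted as having clicked.
    verified_clickers: everyone the Smart Campaign confirmed (click plus page visit).
    Returns the suspected-bot IDs and their share of all counted clicks.
    """
    marketo = set(marketo_clickers)
    if not marketo:
        return set(), 0.0
    verified = set(verified_clickers) & marketo
    suspected = marketo - verified
    return suspected, len(suspected) / len(marketo)


# Placeholder IDs sized to match the numbers in the post: 327 counted, 91 verified.
all_clicks = range(327)
real_clicks = range(91)
suspected, share = bot_click_share(all_clicks, real_clicks)
print(f"{len(suspected)} of 327 suspected bot clicks ({share:.0%})")
# 236 of 327 suspected bot clicks (72%)
```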

All that was left for us to do was to add a few Smart Campaigns to neutralize the bots and stop them from disrupting the scoring system, the interesting moments and all reporting.

We now have a Bot system running in the background making sure all our numbers are correct and not just making us look good.

Thanks all for the great, detailed discussions on this very aggravating topic. I've been trying to read up on all this for several days. I did not find it asked or answered anywhere whether these false clicks from email security software can hit the same link multiple times. Does anyone know if that happens?

I have 3 clicks on the same link from one person. All clicks are within the first minute of the email being delivered. There is no subsequent VWP (Visits Web Page) activity. Only the 3 clicks. All the links in this email were clicked by this person within the first minute of delivery, but this was the only link they clicked 3 times.

Thanks.

> ...if this false clicks from email security software programs can click the same link multiple times. Does anyone know if that happens?

Oh, for sure. And not just multiple times in the short period after receiving the email, but when quarantines are rescanned it could happen at any time.

Wow. This is a tough one. Thanks for the intel.

Hi Sanford Whiteman & All -

I just posted this question but realized I did so to an old thread. Meant to post it here.

I'm wrestling with this issue, too, for my clients. It makes it really hard to evaluate the impact of emails unless they lead to a form. My latest idea is to only change someone's program status to clicked if they have clicked at least twice (or 3 times) on any of the links. E.g.:

Filter 1

Clicks link in email, email is A, link is A

Min number of times = 2

Filter 2

Clicks link in email, email is A, link is B

Min number of times = 2

And so on, with a filter for each link (and the filter logic set to "any"). A bit of a nuisance to build when emails have multiple links. But what do you think? Wouldn't this tactic have a better chance of weeding out link scanners? I'm assuming, of course, that the first click is the link scanner but if there's a 2nd click it's a person.
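To test this rule against an exported click log before building all the filters, something like the following Python sketch could help. The `(email_id, link_url)` tuple shape and the function name are assumptions for illustration; the logic mirrors "any link in email A clicked at least N times".

```python
from collections import Counter


def qualifies_as_clicked(click_events, email_id, min_clicks=2):
    """Apply the 'min number of times' rule to one lead's click events.

    click_events: iterable of (email_id, link_url) tuples (hypothetical shape).
    Returns True if ANY single link in the given email was clicked at least
    min_clicks times, which matches filter logic "any".
    """
    per_link = Counter(link for email, link in click_events if email == email_id)
    return any(count >= min_clicks for count in per_link.values())


one_pass = [("A", "link-a"), ("A", "link-b")]             # each link hit once
repeat = [("A", "link-a"), ("A", "link-b"), ("A", "link-a")]
print(qualifies_as_clicked(one_pass, "A"))  # False
print(qualifies_as_clicked(repeat, "A"))    # True
```

Note that the rule cannot tell who made the second click. As reported earlier in this thread, scanners sometimes hit the same link more than once, so a repeat click is a hint rather than proof of a person.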

Hey Denise,

I get what you're going for here with this solution, and it's certainly an option to consider, but now you're docking those first-time REAL clicks. Instead of weeding out bot clicks, you're also weeding out actual, real life engagement.

For example, we've used some raw data from the database along with time stamps on the activity logs to try to identify frequent known-bot-click-domains (we're entirely B2B), and try to weed those out of reporting. We don't NOT send to those people, and we count the bot clicks overall, but we don't adjust lead score using those activities for contacts on those domains. We know we're filtering out some real activity, but we have decided that being confident in our leads being passed to sales is more important than having 100% accurate engagement numbers (so long as we're still driving value for the org as a whole).

Definitely not an easy problem to solve and I don't expect it to ever go away. Best of luck!
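The domain-based approach above (still count the click, but skip the score change for contacts on known bot-click domains) could be sketched in Python like this. The domain names, the point value, and the function name are all invented for the example; the real list would come from your own analysis of activity timestamps.

```python
# Hypothetical list of domains observed to auto-click every link.
BOT_CLICK_DOMAINS = {"example-secure.com", "scanner-heavy.example"}


def score_delta_for_click(email_address, click_points=5, bot_domains=BOT_CLICK_DOMAINS):
    """Return the lead-score change for an email click.

    Clicks from contacts on a known bot-click domain earn no points; the
    click itself is still recorded elsewhere for overall reporting.
    """
    domain = email_address.rsplit("@", 1)[-1].lower()
    return 0 if domain in bot_domains else click_points


print(score_delta_for_click("pat@example-secure.com"))  # 0
print(score_delta_for_click("sam@other.example"))       # 5
```

The trade-off is the one described above: real clicks from people on those domains also earn nothing.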

Hi Chris,

Yes, you're right that I'd be docking (or just not crediting) the first-time real clicks. I was thinking of that as an interim solution until I've devised a good method of identifying the known-bot-click-domains in our database. Since we mostly target large companies, I have the impression just from looking through "clicked" lists that most of them employ link scanners, so I think I'm okay with erring on the side of under-reporting.

How do you handle clicks in terms of email program success status? That is, does "clicked" count as success?

Thank you for your input!

Best,

Denise

Hey Denise,

Depending on the program and audience (we see large pockets of auto-clicks with specific audience segments), we may use the person's web visit as the benchmark for program success. Many in this thread have shared that they see bots triggering web page views as well, but I've done a ton of testing with our audience and Marketo data, and I do not experience that within our instance at this time.

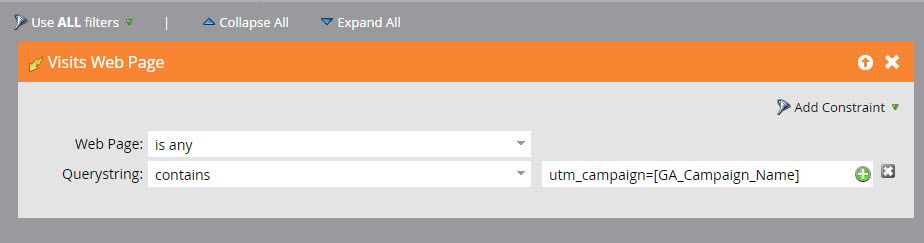

The way I do this on a program-by-program basis is with a Visits Web Page trigger that matches on the querystring of page visits to the website. Since we tag all of our emails with Google Analytics tracking params, we basically just put that program's campaign ID into the querystring constraint, so any page visit to the site with that in the querystring triggers the program to set success. This way, if you have 3 links in your email to different pages, the trigger is just looking for anyone landing on the site with your GA tags in place for that program. I'm also playing around with tokenizing by appending program.id to all links, then dynamically searching for that in my trigger, but I haven't built that out fully yet.

A couple of things to note: 1) You will see fewer page visits than clicks. Every time. That's normal: it's the natural drop-off of users bouncing quickly, combined with the bot click problem. 2) Your experience with bots also triggering this page view may differ from mine. I've done lots of testing, and it appears to be fairly clean for the small group I use this approach with in our database, as far as I can tell.

Good luck!
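To illustrate the querystring match described above outside of Marketo, here is a small Python sketch. It assumes the campaign ID travels in the standard Google Analytics `utm_campaign` parameter; the URLs, the ID, and the function name are made up for the example.

```python
from urllib.parse import urlparse, parse_qs


def visit_matches_program(page_url, campaign_id, param="utm_campaign"):
    """Return True if a visited URL carries the program's campaign tag.

    The page path is ignored on purpose, so any tagged link from the email
    counts, whichever page it leads to.
    """
    query = parse_qs(urlparse(page_url).query)
    return campaign_id in query.get(param, [])


tagged = "https://www.example.com/pricing?utm_source=marketo&utm_campaign=q1-nurture-03"
other_page = "https://www.example.com/blog/post?utm_campaign=q1-nurture-03"
untagged = "https://www.example.com/pricing"
print(visit_matches_program(tagged, "q1-nurture-03"))      # True
print(visit_matches_program(other_page, "q1-nurture-03"))  # True
print(visit_matches_program(untagged, "q1-nurture-03"))    # False
```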

Hi Chris,

Thanks so much for this useful information! In the case of the particular client I am working hardest with on this topic, unfortunately we don't have the option of using web page visits on many of the emails we are sending these days because they lead to other sites. But I'll bear this in mind, for sure!

Denise

Has anyone seen the numbers for Opens getting skewed as well? Since that number is tracked via an image/pixel, I'm surprised to not hear about skewing of that number.

Good question. But remember that the mail scanners are interested in security (not privacy) risks. A 1x1 image wouldn't be a security concern (unlike for example a giant 800x600 image with spamvertising text on it, which would be worth OCRing). So to the degree that a scanner can predict final visual layout the pixel wouldn't be worth fetching.

Yep, we haven't seen that behavior.

We have seen a similar situation to this thread creeping in on our metrics.

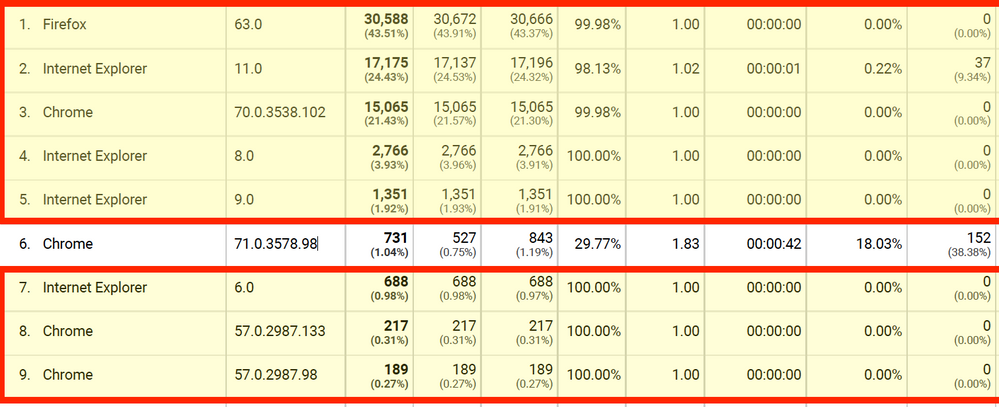

Recently, I decided to break down our email traffic by Browser and Browser version, in accordance with some helpful tips from this article.

Specifically, this part:

The most common fingerprint of a bot visit (within Google Analytics) is very low quality traffic – indicated by 100% New Sessions, 100% Bounce Rate, 1.00 Pages/Session, 00.00.00 Avg Session Duration, or all of the above.

The Criteria:

I looked at traffic using the criteria above (a slight deviation between sessions and new sessions was OK) and also checked whether it hit a goal completion or not. Traffic is from Q1'19.

The Findings:

Of the 70K users/sessions, only about 2K appear to have actual activity attached to them.

This would indicate that only about 2.85% of my email clicks came from real visitors. Put another way, about 97% of our email traffic was likely from these email scanners.

Image Snippet from GA report:

Conclusion:

We're still tackling exactly how to approach reporting of true email traffic, but one thing is for sure: all is not what it seems!
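The Google Analytics fingerprint quoted above can be applied to an exported browser/version report with a short Python sketch like this one. The row fields, the 2% tolerance, and the choice to require every signal plus zero goal completions are assumptions for illustration, and the sample rows are invented, not taken from the report discussed here.

```python
from dataclasses import dataclass


@dataclass
class SegmentRow:
    """One row of a hypothetical GA export, e.g. one browser version."""
    label: str
    sessions: int
    new_sessions: int
    bounce_rate: float          # 1.0 means 100%
    pages_per_session: float
    avg_session_seconds: float
    goal_completions: int


def looks_like_scanner(row, tolerance=0.02):
    """Flag rows matching the low-quality-traffic fingerprint."""
    if row.sessions == 0:
        return False
    new_share = row.new_sessions / row.sessions
    return (
        new_share >= 1 - tolerance              # ~100% new sessions
        and row.bounce_rate >= 1 - tolerance    # ~100% bounce rate
        and row.pages_per_session <= 1 + tolerance
        and row.avg_session_seconds < 1         # 00:00:00 duration
        and row.goal_completions == 0
    )


rows = [
    SegmentRow("Browser X 1.0", 1000, 995, 1.0, 1.0, 0.0, 0),
    SegmentRow("Browser Y 72", 200, 120, 0.45, 2.3, 95.0, 4),
]
flagged = [r.label for r in rows if looks_like_scanner(r)]
print(flagged)  # ['Browser X 1.0']
```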

That's quite helpful. We have estimated that 70-90% of our clicks are spam, which is hugely disappointing of course.

Sanford Whiteman - is it possible to take the Click Detail from the logs and put it into a table like the above? I've been somewhat suspicious that the Device and Browser Type would be a clue to a click scanner. I bet this is an API pull though.

I'm sure this has been asked and answered, but I can't find it explicitly, so here goes:

Why can we not add a wait step? So the functionality would look like this:

Smart List: Email is Delivered

Flow:

- Wait 10 Seconds

- Request Campaign (Your normal email processing campaign)

This way you're not monitoring activity on the email until 10 seconds after delivery, which should exclude the majority of bot activity (but I understand not 100% of it).

Either way, if you deliver an email, track link clicks 10 seconds later, then lead them to a page with either an active form or a hyperlinked path, your stats should be relatively clean.

That's my (very top-level) hypothesis at least. Rather than spending the time it would take to test it, though, I figured I'd come to the experts and see if it's even worth my time.

Another side question I had was the function of the "Clicks Link." Is this an active tracker? OR will it pick up the bot click in retrospect? (rendering the 10 second wait step nearly useless, as everything above can be accomplished without it)

Thanks in advance!!!

Justin

> - Request Campaign (Your normal email processing campaign)
>
> This way you're not monitoring activity on the email until 10 seconds after delivery, which should exclude the majority of bot activity

When you request your normal processing campaign, you now must use filters instead of triggers (since you're now using a "campaign is requested" trigger). So the 10 second wait step has no impact whatsoever and your "normal" processing campaign is no longer a trigger campaign based on email activity.

I didn't even get to that part.

Hi Justin,

While I'm not an expert on bots, I very much doubt that you can count on "wait 10 seconds" to eliminate them, for a couple of reasons: 1) I doubt you can count on the precise timing of bot clicks vis-a-vis when Marketo deems the email has been delivered; the bot may "click" the links more than 10 seconds later. And 2) the wait step means your campaign will run at low priority, so the flow steps after the wait step could run a lot longer than 10 seconds after the email was delivered, depending upon what higher priority campaigns are active at the time.

"Clicks Link" as a trigger (in orange) reacts as soon as it sees the link clicked.

"Clicked Link" is a filter that looks for past activity. You can control how far in the past with constraints (e.g., Clicked Link in past 5 minutes, 30 days, etc.).

Denise
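The difference matters for the wait-step idea: a filter looks backwards over a window, so it will still find a click the scanner made right after delivery. A rough Python sketch of that lookback behavior, with made-up timestamps and a hypothetical function name (this only models the idea, it is not how Marketo evaluates filters internally):

```python
from datetime import datetime, timedelta


def clicked_link_in_past(click_times, now, lookback):
    """Model a 'Clicked Link in past <lookback>' style filter.

    Returns True if any recorded click falls inside the window that ends
    at `now`, regardless of who or what made the click.
    """
    cutoff = now - lookback
    return any(cutoff <= t <= now for t in click_times)


delivered = datetime(2019, 3, 1, 9, 0, 0)
scanner_click = [delivered + timedelta(seconds=5)]
check_time = delivered + timedelta(minutes=10)

print(clicked_link_in_past(scanner_click, check_time, timedelta(minutes=5)))  # False
print(clicked_link_in_past(scanner_click, check_time, timedelta(days=30)))    # True
```

With any realistic lookback window the early scanner click is still inside it, so delaying the check does not remove it.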

Exactly, there's nowhere near 10s granularity available here.

Yes, we were recently affected by bot activity that hijacked our Marketo form. Fortunately, the bot was using the same IP each time, so I set a flow step to block the activity based on IP.

Raj Jain, the "bot" being discussed here is an automated scanner that pre-follows links for security purposes, not a malicious bot.

- Copyright © 2025 Adobe. All rights reserved.
