
Scheduled Program Cloning Via API


Fingers cramping up from all the clicking you are doing to clone that boatload of programs? Learn how you can automate the cloning of programs using the API and Zapier according to monthly, quarterly, or yearly cadences.

I am guessing that your company, like my own, likes to sync programs to Salesforce campaigns to enable campaign influence reporting in Salesforce. One reason you might want to periodically clone is to get greater resolution into campaign tracking to improve your multi-touch attribution modeling.

Due to the way that programs are set up, once someone becomes a member and gets added to a campaign in Salesforce, we have no way of knowing whether they interacted with that campaign again unless their status changes.

For example, if you have an attribution campaign that tracks visits to your website from Google and puts people in the visited status in the "Organic Google" program then they will be added to the corresponding Salesforce campaign in the visited status.

Now, what if this person visits your website again next month? Well, they are already in the visited status in the "Organic Google" Salesforce campaign and so when it comes to campaign influence and multi-touch attribution it is as if this person never came back to your site.

So what can we do to get greater resolution? .... You guessed it: Periodic cloning.

Now, if we were to clone the "Organic Google" program once every month then we could capture a new touchpoint for a person in this campaign every month. If they visit twice in a month then, yes, we will not be able to track the second touchpoint, so it is up to you what balance you strike between the resolution you want in your campaign influence reporting and how often you want to clone programs.

Even if you do not sync your programs to Salesforce you might still be interested in periodic cloning so that the number of program members in your programs does not become too large.

N.B. While I can show you how to automate cloning using the API, there is no API request available that will let you sync a program to a Salesforce campaign so unfortunately there will still be some manual clicking necessary to sync all the newly cloned programs to Salesforce campaigns.

Options for Cloning in Bulk

Regardless of your reason for wanting to clone programs (there is no judgment here!) there are 2 ways you can go about cloning programs in bulk:

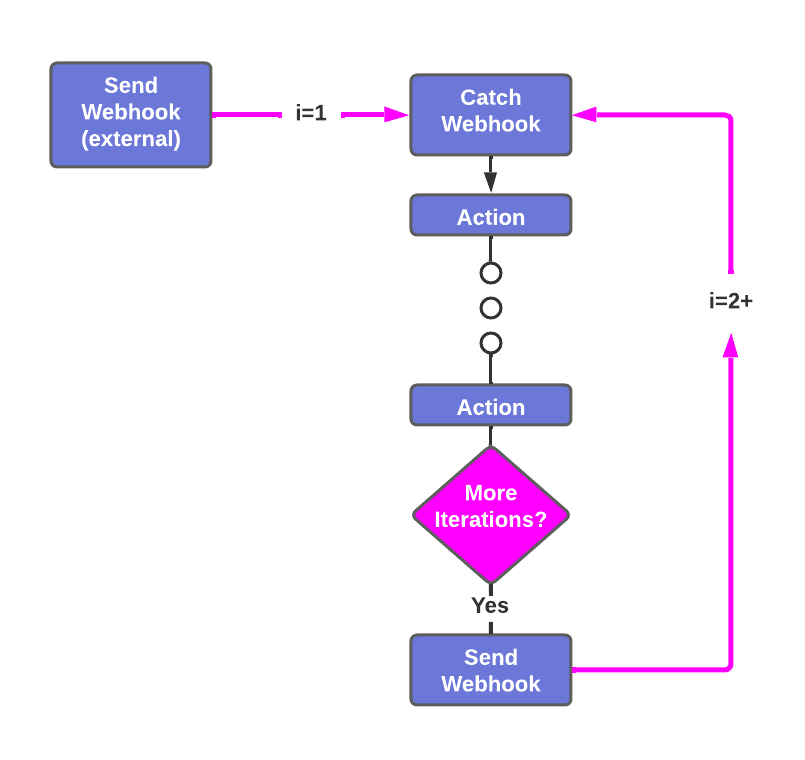

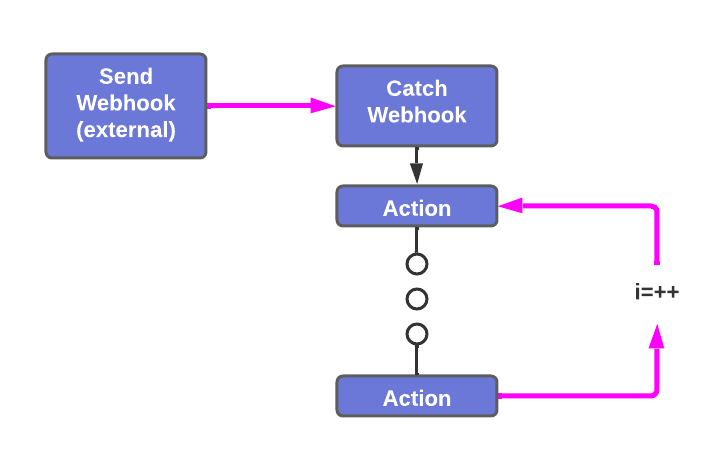

- You can bookend your Zap with webhooks and use these webhooks to create an iterative loop to clone all your programs (see the "Looping with Webhooks" section below)

- You can use the inbuilt "Looping by Zapier" action to clone all your programs (see the "Looping by Zapier" section below)

Why would you choose one over the other?

The "Looping by Zapier" action is easier to use, but it is limited to 500 iterations and you have to use a hack to get the iterations to execute sequentially and avoid exceeding API limits. On the other hand, you can loop as much as you want with the webhook method, and the iterations are guaranteed to execute sequentially with no fear of exceeding the REST API limits.

Before diving into these more complex examples where looping is needed, let's start with a simple example without looping just to get you comfortable making cloning API requests.

Since this will be an API heavy post, I recommend checking out the Quick-Start Guide to the REST API if it is your first time using the API or you need a quick refresher. It will show you how to make your first API requests in Postman before transitioning to making requests in code or in the Zapier automation tool.

Also if you are looking for a course for learning the Marketo API then DM me 🙂

Cloning Simple (No Looping)

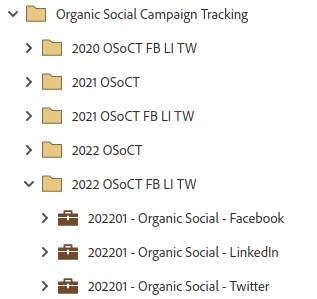

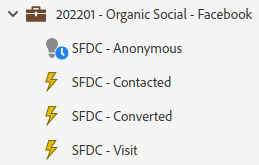

In this example, we want to clone 3 programs responsible for tracking visits from Facebook, LinkedIn, and Twitter using UTM parameters and setting a person's first and last touch attribution fields accordingly (see the UTM Tracking & Automation post to learn how the programs and their smart campaigns are set up to achieve this).

N.B. All the Python code used in the "Code by Zapier" actions below can be found in the organic_social_campaign_creation repository on GitHub.

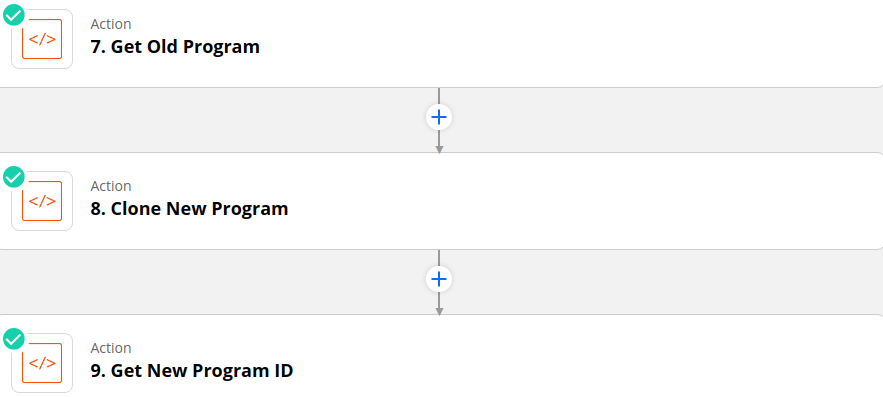

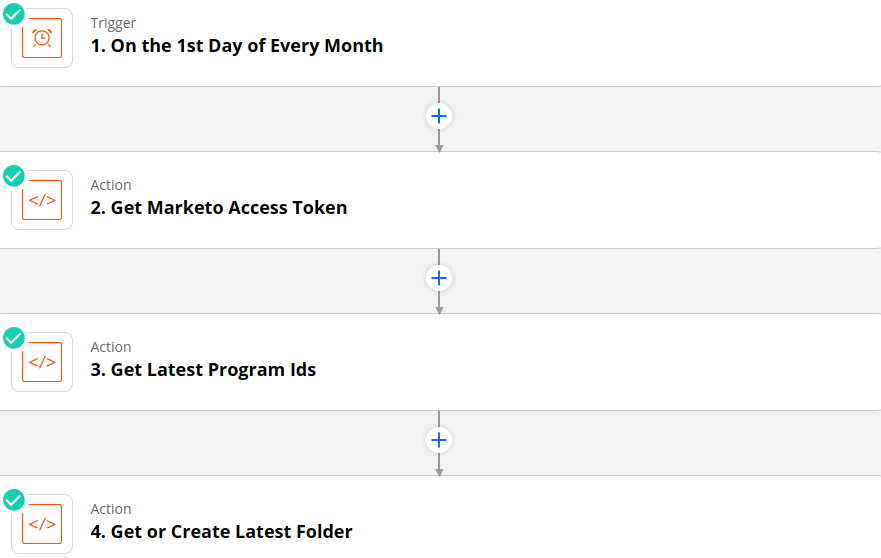

On the first day of every month at midnight, this zap will run and:

- Get the access token that is needed to make all subsequent API requests (get_marketo_access_token.py)

- Since each program name follows the same structure, i.e. YYYYMM - Organic Social - Platform, we can get the program ids for last month's programs by using a "for loop" and the Get Program by Name endpoint (get_latest_program_ids.py)

- If it is the first time that this zap has run this year, i.e. every January, then a new "YYYY OSoCT FB LI TW" folder will be created to house the constituent programs. Otherwise, the folder already exists and the id of this folder will be returned (get_or_create_latest_folder.py).
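The naming logic behind these steps can be sketched in Python as follows. This is a minimal illustration and not the code from the repository: the function names, the `PLATFORMS` list, and the base URL are assumptions, and the request path reflects my understanding of the Get Program by Name endpoint.

```python
from datetime import date
from urllib.parse import urlencode

# Platform labels are assumed for illustration; match them to your own program names.
PLATFORMS = ["Facebook", "LinkedIn", "Twitter"]


def previous_month_prefix(today):
    """Return last month's YYYYMM prefix, rolling back to December in January."""
    year, month = today.year, today.month - 1
    if month == 0:
        year, month = year - 1, 12
    return f"{year}{month:02d}"


def last_month_program_names(today):
    """Build last month's program names: 'YYYYMM - Organic Social - Platform'."""
    prefix = previous_month_prefix(today)
    return [f"{prefix} - Organic Social - {platform}" for platform in PLATFORMS]


def program_by_name_url(base_url, name):
    """Build the lookup URL (path assumed: /rest/asset/v1/program/byName.json)."""
    query = urlencode({"name": name})
    return f"{base_url}/rest/asset/v1/program/byName.json?{query}"


def year_folder_name(today):
    """Name of the yearly folder that houses the cloned programs."""
    return f"{today.year} OSoCT FB LI TW"
```

Handling the January rollover explicitly matters here, because the run on 1 January has to look up programs whose names start with the previous year's December prefix.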

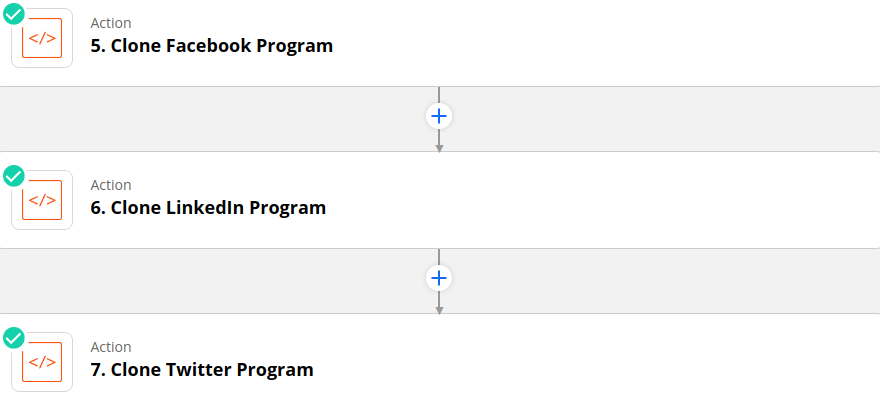

Next, the old program ids from Step 3 are used in requests to the cloning endpoint to clone these 3 programs into the parent folder obtained from Step 4.

Since each Zapier action has a 10-second timeout limit and cloning a program using the API takes several seconds, I recommend that you separate the cloning of these programs into their own actions instead of grouping them all together in a single action (I learned the hard way so trust me haha).

For convenience, when making the cloning API request the description of each program is set to the UTM parameters used for this platform in the current month, i.e. utm_source=organic_social&utm_medium=platform&utm_campaign=rc_yyyy_mm.
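As a rough sketch of how such a clone request could be assembled, the snippet below builds the UTM description and the form fields without sending anything. The helper names are hypothetical, lowercasing the platform name is an assumption, and the path and field names (`name`, `folder`, `description`) follow my understanding of the Clone Program endpoint, so check them against the API reference before relying on them.

```python
import json


def utm_description(platform, year, month):
    """UTM string stored as the program description for the given month."""
    return (
        f"utm_source=organic_social&utm_medium={platform.lower()}"
        f"&utm_campaign=rc_{year}_{month:02d}"
    )


def build_clone_request(base_url, old_program_id, new_name, folder_id, description):
    """Return (url, form_fields) for one clone call; send it with any HTTP client."""
    url = f"{base_url}/rest/asset/v1/program/{old_program_id}/clone.json"
    fields = {
        "name": new_name,
        # The destination folder is passed as a JSON string (assumed format).
        "folder": json.dumps({"id": folder_id, "type": "Folder"}),
        "description": description,
    }
    return url, fields
```

Keeping the request construction separate from the HTTP call makes it easy to put each clone in its own Zapier action, which is what the 10-second timeout calls for.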

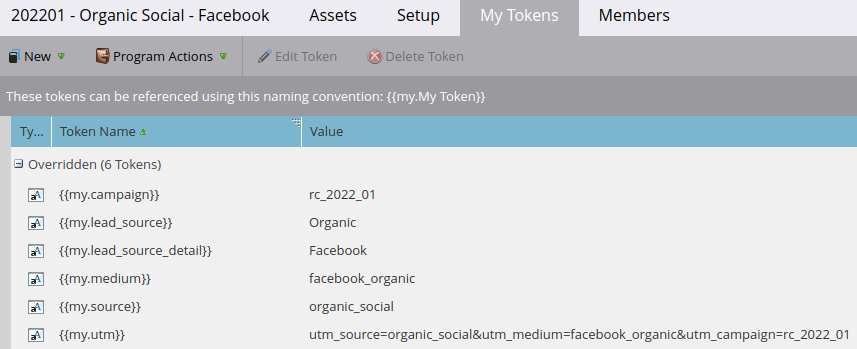

The smart campaigns within a program use the local "My Tokens" of the program in order to set the values of attribution fields (see the UTM Tracking & Automation post to learn how the smart campaigns are set up to achieve this). As can be seen from the image below, only the {{my.campaign}} and {{my.utm}} tokens need to be updated when the program is cloned each month since they are date-dependent.

In Step 8, nested "for loops" are used along with the Tokens Endpoint to loop through each program and update the {{my.campaign}} and {{my.utm}} tokens (update_program_tokens.py).
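The nested loops described here might look roughly like the sketch below, which only builds the requests so that it can be run without credentials. It is not update_program_tokens.py: the function name, the input shape, the `text` token type, and the endpoint path and field names are all assumptions based on my reading of the Tokens Endpoint.

```python
def build_token_updates(base_url, tokens_by_program):
    """Return one (url, form_fields) pair per program and per token.

    tokens_by_program maps a program id to {token_name: new_value}.
    """
    updates = []
    for program_id, tokens in tokens_by_program.items():  # outer loop: programs
        for name, value in tokens.items():                # inner loop: tokens
            url = f"{base_url}/rest/asset/v1/folder/{program_id}/tokens.json"
            fields = {
                "name": name,            # assumed: token name without the "my." prefix
                "type": "text",          # assumed token type
                "value": value,
                "folderType": "Program",
            }
            updates.append((url, fields))
    return updates
```

With 3 programs and 2 date-dependent tokens each, this yields 6 requests per monthly run.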

Finally, in the last 2 steps, the smart campaign ids within the programs are obtained so that their descriptions can be updated with the UTM parameters that are being tracked for each platform. Since the smart campaigns use these parameters in their smart lists, pasting these parameters into the description makes it easier, later on, to copy the parameters straight from the description into the smart list (get_smart_campaign_ids.py & update_smart_campaign_descriptions.py).

Setting Cloning Frequency

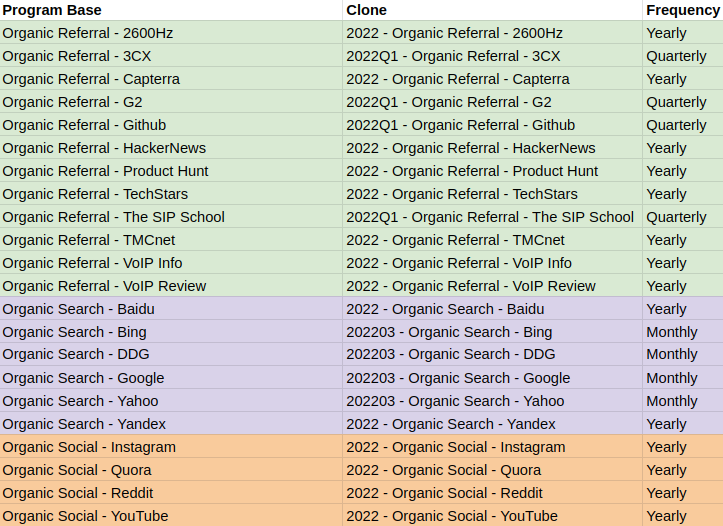

The above workflow for cloning the 3 social media programs is straightforward, but what if you want to clone tens or hundreds of programs, and what if some programs need to be cloned quarterly or yearly rather than monthly?

This is where we need to introduce looping in Zapier along with a Google sheet and Google Scripts that are used to schedule when programs should be cloned.

Evergreen Cloning

The Evergreen Programs tab of the Google Sheet contains a list of evergreen programs, i.e. programs that are going to be running for the foreseeable future, along with the frequency at which they need to be cloned. Once the new version of a program has been cloned, the zap uses the Program Base column to look up the row whose Clone column it needs to update with the name of the newly cloned program.

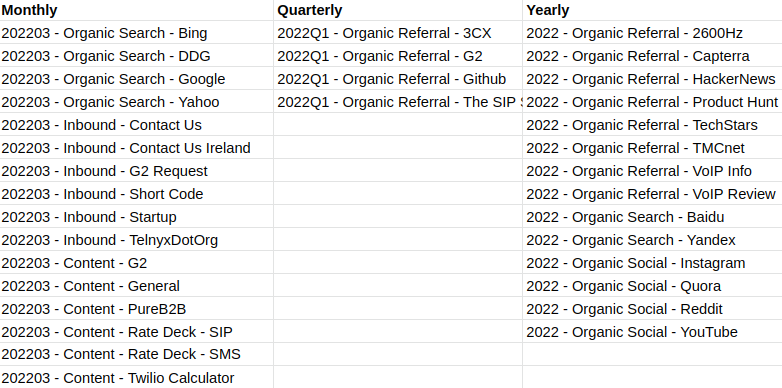

A simple Google sheet filter formula is then used to group all the evergreen programs together into either the "Monthly", "Quarterly", or "Yearly" columns.

Then at the start of every month, 3 Google scripts run to concatenate the program names in each column and then put the concatenated string in the corresponding "Monthly Programs", "Quarterly Programs", or "Yearly Programs" columns in the Evergreen Submissions tab in the first available empty row.

- The setMonthlyValues.gs script will populate the "Monthly Programs" cell every month and send a webhook to trigger the "Monthly Cloning" zap, which then iterates through each program in the "Monthly Programs" concatenated string and clones a new version of the program for the new month.

- Although the setQuarterlyValues.gs script runs every month, an if statement ensures that it only populates the "Quarterly Programs" cell and sends a webhook to trigger the "Quarterly Cloning" zap when it is a new quarter. The zap then iterates through each program in the "Quarterly Programs" concatenated string and clones a new version of the program for the new quarter.

- Although the setYearlyValues.gs script runs every month, an if statement ensures that it only populates the "Yearly Programs" cell and sends a webhook to trigger the "Yearly Cloning" zap when it is a new year. The zap then iterates through each program in the "Yearly Programs" concatenated string and clones a new version of the program for the new year.
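The scheduling checks in these three scripts can be illustrated in Python (the real scripts are Google Scripts, so treat this as a sketch of the logic only). It assumes calendar quarters starting in January, April, July, and October, and it assumes the program names are joined with the same * delimiter that the looping action uses later in this post; the function names are hypothetical.

```python
from datetime import date

DELIMITER = "*"  # assumed to match the text delimiter used when splitting later


def is_new_quarter(today):
    """True in the first month of a calendar quarter."""
    return today.month in (1, 4, 7, 10)


def is_new_year(today):
    """True in January."""
    return today.month == 1


def due_cadences(today):
    """List which cloning zaps should be triggered on this monthly run."""
    cadences = ["Monthly"]
    if is_new_quarter(today):
        cadences.append("Quarterly")
    if is_new_year(today):
        cadences.append("Yearly")
    return cadences


def concatenate_programs(names):
    """Join the non-empty program names into the single string stored in the sheet."""
    return DELIMITER.join(name.strip() for name in names if name and name.strip())
```

On 1 January all three cadences fire, which is one reason to stagger the scripts across separate hours.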

The three aforementioned scripts are scheduled to run at 2-3am, 3-4am, and 4-5am respectively on the 1st day of every month. The scripts are scheduled apart to avoid exceeding concurrent API call limits.

N.B. If you want to learn about how to trigger a Zap using a Google Script then DM me for a link to the relevant blog post 🙂

Paid Cloning

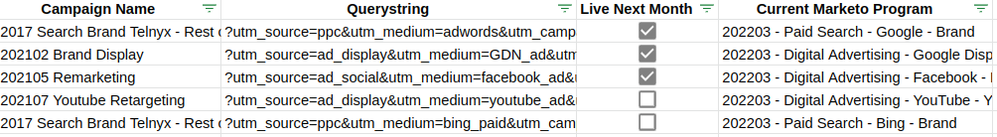

Contrary to the evergreen programs, the Paid Programs might not need to be cloned again. For example, if an ad campaign is underperforming and is being turned off at the end of the month then there is no need to clone the corresponding program next month.

As a result, the "Live Next Month" checkbox column needs to be updated on the last day of the current month to indicate all the programs that need to be cloned for the upcoming month.

The setMonthlyValuesPaid.gs script then runs at 1-2am on the 1st day of every month to concatenate all the program names marked as "Live Next Month" and then store them in the first empty row in the Paid Submissions tab in the "Monthly Programs" column.

The webhook sent by this Google Script then triggers the "Monthly Paid Cloning" zap to iterate through the program names in the concatenated string and clone new versions of these programs.
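The filtering step for paid programs could be sketched like this. The "Live Next Month" column comes from the sheet described above, while the "Program" column name, the row format (a list of dictionaries), and the * delimiter are assumptions made for illustration.

```python
def paid_programs_to_clone(rows, delimiter="*"):
    """Concatenate the names of paid programs ticked as 'Live Next Month'."""
    live = [row["Program"] for row in rows if row.get("Live Next Month") is True]
    return delimiter.join(live)


rows = [
    {"Program": "202403 - Paid - Google Ads", "Live Next Month": True},
    {"Program": "202403 - Paid - LinkedIn Ads", "Live Next Month": False},
    {"Program": "202403 - Paid - Facebook Ads", "Live Next Month": True},
]
to_clone = paid_programs_to_clone(rows)
```

Programs whose checkbox is left unticked simply drop out of the string, so the zap never sees them.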

N.B. The reason the script is set to run at 1-2am instead of 12-1am is that there were issues in the past with clocks going back an hour, causing this script to run on the last day of the previous month instead of the 1st day of the current month.

Again this script is set to run before any of the other aforementioned Google scripts to avoid exceeding concurrent API call limits and because we want to set up attribution tracking for our paid campaigns first since we are putting money into getting these leads.

Cloning with Looping

Once any of the 4 Cloning zaps has been triggered by receiving a webhook from a Google script, there are 2 ways to loop through the programs that need cloning.

Looping with Webhooks

N.B. The code for each zap can be found in the corresponding GitHub repositories.

- Monthly Cloning: monthly_attribution_campaign_cloning

- Monthly Paid Cloning: monthly_attribution_paid_campaign_cloning

- Quarterly Cloning: quarterly_attribution_campaign_cloning

- Yearly Cloning: yearly_attribution_campaign_cloning

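```javascript
// Google Script code used to send a webhook to Zapier
// `ts` is the timestamp set earlier in the script (not shown here)
var url = "https://hooks.zapier.com/hooks/catch/###/xxx/";
var options = {
  "method": "post",
  "headers": {},
  "payload": {
    "Timestamp": ts,
    "Index": '0'
  }
};
// Send the webhook (call assumed; the original snippet stops at `options`)
UrlFetchApp.fetch(url, options);
```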

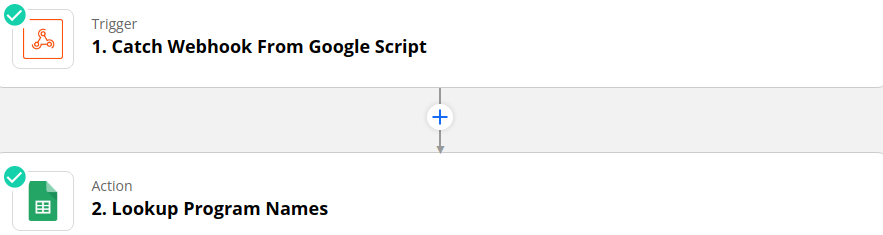

The inbound webhook from the Google Script contains the timestamp needed in action #2 to look up the latest row in the Evergreen Submissions or Paid Submissions Google Sheets tab containing the monthly, quarterly, and/or yearly program names. This webhook also contains a value of 0 for the index field which is used to keep track of loop iterations.

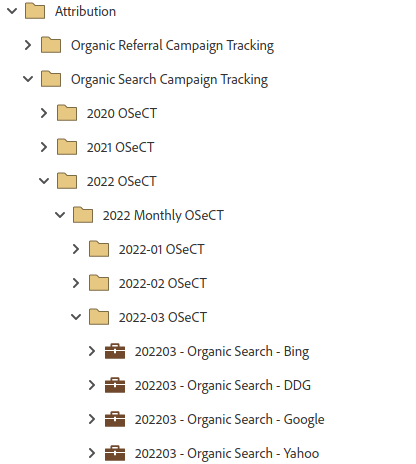

The parent folders (Level 1) for each attribution channel are already known i.e. Organic Search Campaign Tracking, Organic Social Campaign Tracking, Organic Referral Campaign Tracking, and Paid Campaign Tracking.

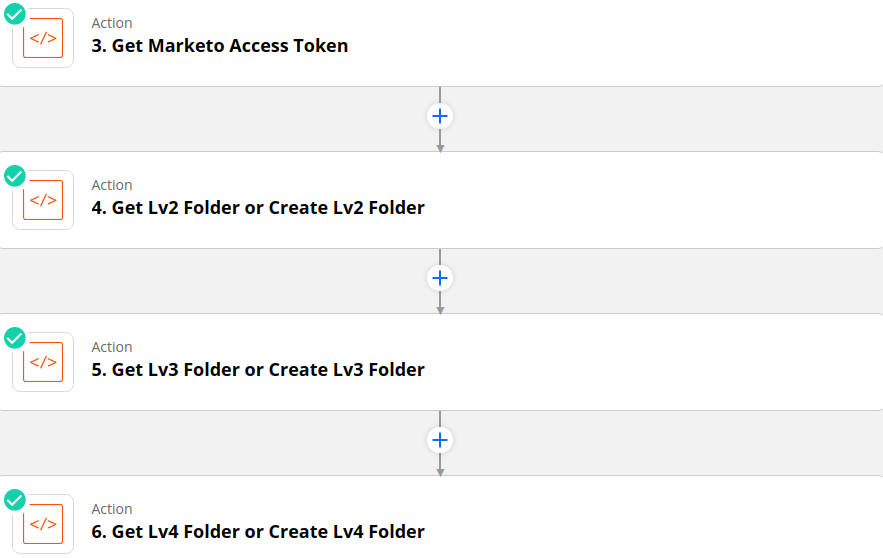

Therefore, once the access token has been obtained, there are 3 subsequent API requests to find the Level 2 folder for the year, then the Level 3 folder containing all the monthly folders, and finally the Level 4 folder for the current month. If any of these folders do not already exist then there is a fallback in the Python code that will create the folder.

The code in the "Get Lv2 Folder or Create Lv2 Folder" action also:

- Uses the `Index` value provided from the webhook to determine which program from the array of programs needs to be cloned, e.g. if it is the 5th iteration of the loop then the index will be 4 (since Python array indices start at 0) and the 5th program in the list at index 4 will be cloned.

- Increments the value of `Index` and, if the index is still less than the number of programs in the array, sets the `More` boolean flag to `True` so that the zap passes through the filter at the end of the zap and another webhook is sent to restart the zap with this incremented index.
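The index bookkeeping for the webhook loop can be sketched as follows. The function name, the returned dictionary shape, and the * delimiter are assumptions; the only details taken from the post are that the index arrives as the string '0' on the first run and that the flag is true while programs remain.

```python
def next_iteration(programs_text, index, delimiter="*"):
    """Pick the program for this iteration and work out whether to loop again."""
    programs = programs_text.split(delimiter)
    index = int(index)             # the webhook sends the index as a string
    program = programs[index]      # e.g. index 4 selects the 5th program
    next_index = index + 1
    more = next_index < len(programs)
    return {"Program": program, "Index": str(next_index), "More": more}
```

For a string of three programs, the flag is true for indices 0 and 1 and false for index 2, so the filter at the end of the zap stops the loop after the third clone.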

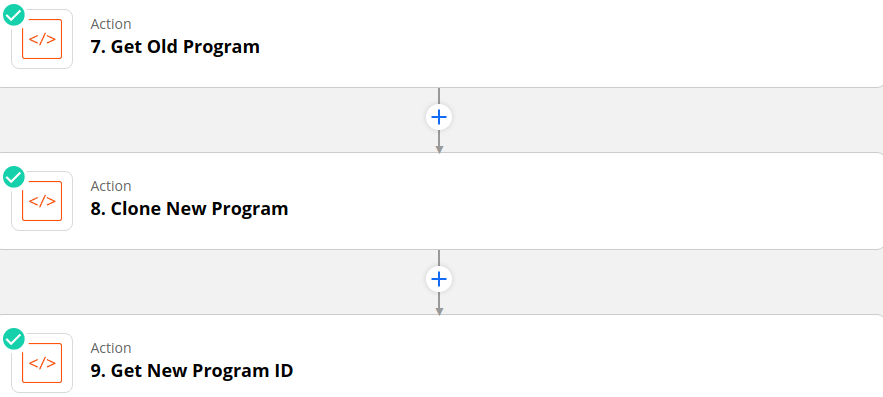

- The "Get Old Program" step takes the program name to be cloned from the "Get Lv2 Folder or Create Lv2 Folder" action and obtains the program id.

- This old program is then cloned into the folder designated in the "Get Lv4 Folder or Create Lv4 Folder" action and named for the current year and month e.g. YYYYMM - Organic Search - Google.

Although the "Clone New Program" step should return the id of the newly cloned program, oftentimes I found that Marketo would not return a successful response to Zapier before the 10-second timeout limit. Consequently, this step might be marked as failed in Zapier even though the program was successfully cloned.

Therefore, a redundancy step is introduced where the newly cloned program id is obtained by searching for the new program by name, e.g. YYYYMM - Organic Search - Google. All subsequent steps then use the id obtained from this action (in Step 9) rather than the cloning action (in Step 8), so that if Step 8 is marked as failed the rest of the zap will be able to continue.
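The idea behind the redundancy step, compressed into a single function for illustration, is shown below. In the zap these are separate actions; here `clone_program` and `find_program_id` are hypothetical callables standing in for the clone request and the lookup by name.

```python
def clone_and_resolve_id(clone_program, find_program_id, new_name):
    """Clone a program, then trust the lookup by name rather than the clone response."""
    try:
        clone_program(new_name)
    except TimeoutError:
        # The clone may still have succeeded on Marketo's side, so carry on.
        pass
    program_id = find_program_id(new_name)
    if program_id is None:
        raise RuntimeError(f"Program not found after cloning: {new_name}")
    return program_id
```

The lookup is the source of truth in both the success and the timeout case, which means downstream steps never depend on whether the clone response arrived in time.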

- All the ids for the smart campaigns that exist in the newly cloned program are obtained.

- Then a for loop is used to cycle through the smart campaign ids in the new program and either schedule them or activate them based on the name of the campaign.

- All the ids for the smart campaigns that exist in the old program are obtained.

- Then a for loop is used to cycle through the smart campaign ids in the old program and either delete them (batch campaign schedules cannot be turned off using the API) or deactivate them based on the name of the campaign.

- The row containing the old program that was cloned is looked up in either the Evergreen Programs or the Paid Programs tabs.

- Then the name of the program is updated to the newly cloned program's name so that the next time the zap runs it will clone the most recent program.
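The schedule-or-activate decision for the smart campaigns in the newly cloned program could be sketched as below. The post only says the choice is based on the campaign name, so the convention used here (names containing "Batch" are scheduled, everything else is activated) is purely hypothetical, as are the function name and the input format.

```python
def plan_new_program_actions(campaigns, batch_marker="Batch"):
    """Decide, per smart campaign, whether to schedule it or activate it."""
    plan = []
    for campaign in campaigns:
        is_batch = batch_marker.lower() in campaign["name"].lower()
        action = "schedule" if is_batch else "activate"
        plan.append((campaign["id"], action))
    return plan


plan = plan_new_program_actions(
    [
        {"id": 1, "name": "01 - Batch - Backfill Members"},
        {"id": 2, "name": "02 - Trigger - Visits Web Page"},
    ]
)
```

Producing a plan first and sending the requests afterwards keeps the naming rule in one place, which helps if your own convention differs.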

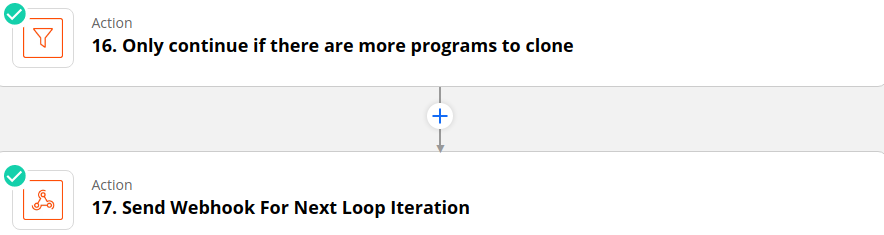

- If the `More` boolean flag set in the "Get Lv2 Folder or Create Lv2 Folder" action is `True` then the zap will progress to send a webhook with the incremented index value to restart the zap at the next loop iteration.

- Otherwise, if `More` is set to `False` then the zap stops at the filter and the loop terminates, meaning that all programs have been cloned.

Looping by Zapier

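```javascript
// Google Script code used to send a webhook to Zapier
// `ts` is the timestamp set earlier in the script (not shown here)
var url = "https://hooks.zapier.com/hooks/catch/###/xxx/";
var options = {
  "method": "post",
  "headers": {},
  "payload": {
    "Timestamp": ts
  }
};
// Send the webhook (call assumed; the original snippet stops at `options`)
UrlFetchApp.fetch(url, options);
```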

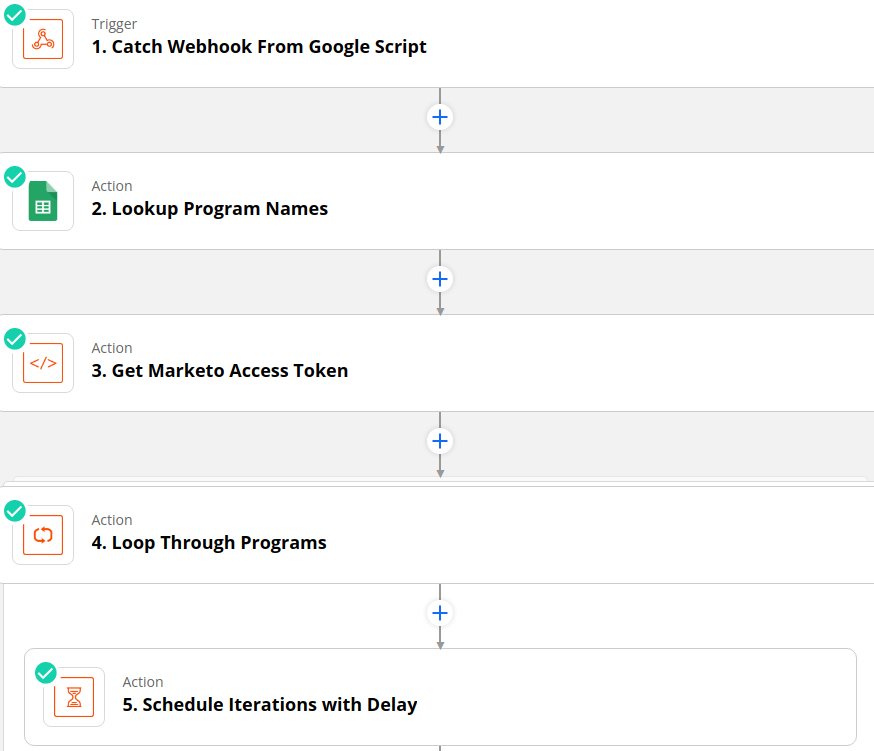

Looking at the Google Script code above, you can see that this time, only a timestamp is sent to Zapier because the "Looping by Zapier" action will handle the tracking of loop iterations.

Again this timestamp is used to look up the latest row in the Evergreen Submissions or Paid Submissions Google Sheets tab containing the monthly, quarterly, and/or yearly program names. Then the access token is obtained before the "Looping by Zapier" action is started.

Notice that these first 2 actions are outside the looping action, meaning they will only be executed once. You could move the access token action inside the looping action so that the token is requested at the start of every iteration. This reduces the risk of the token, which has a limited lifetime, expiring in the middle of the loop.

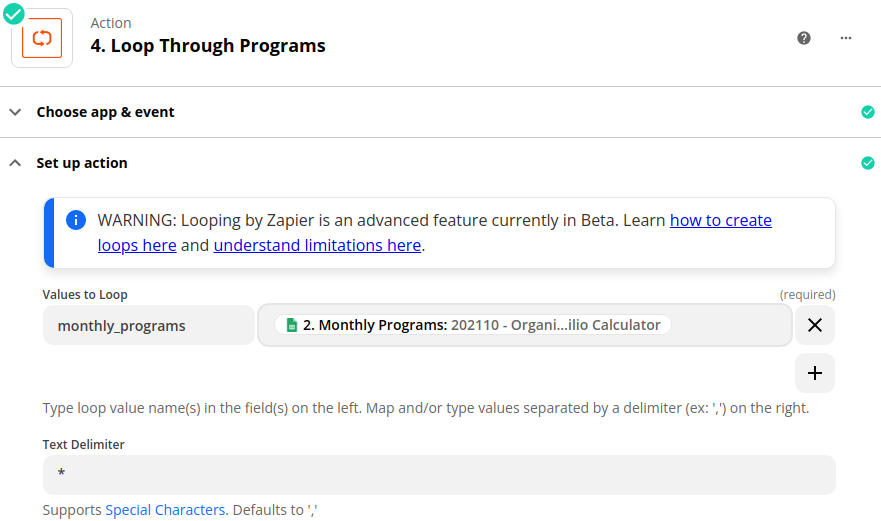

The "Create Loop From Text" option with the * text delimiter is used to split the array of program names from Google Sheets so that each iteration of the loop will clone one of the programs.

If you want to learn more about how the "Create Loop From Text" action works and how to trigger it using a Google Script then DM me to get a link to the relevant blog post 🙂

It is worth noting here that the "Looping by Zapier" action does not carry out the loop iterations sequentially. Instead, the loop action executes the iterations simultaneously, so there is no guarantee that the program at index 0 in the array will be cloned before the program at index N. This creates a risk of exceeding the API limits of a maximum of 10 concurrent API calls and a maximum of 100 calls in 20 seconds.

Far from ideal!
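To see how quickly simultaneous iterations run into these limits, here is a back-of-the-envelope check. It assumes the worst case, where every iteration starts at the same moment, each has one call in flight, and all calls land inside the same 20-second window; the function name and that simplification are mine.

```python
MAX_CONCURRENT_CALLS = 10   # limit quoted above
MAX_CALLS_PER_WINDOW = 100  # per 20 seconds, as quoted above


def exceeds_limits(iterations, calls_per_iteration):
    """Worst-case check for iterations that all start at the same time."""
    concurrent = iterations                   # one in-flight call per iteration
    total = iterations * calls_per_iteration  # all calls inside one window
    return concurrent > MAX_CONCURRENT_CALLS or total > MAX_CALLS_PER_WINDOW
```

Under these assumptions, looping over as few as 11 programs at once is already enough to breach the concurrency limit.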

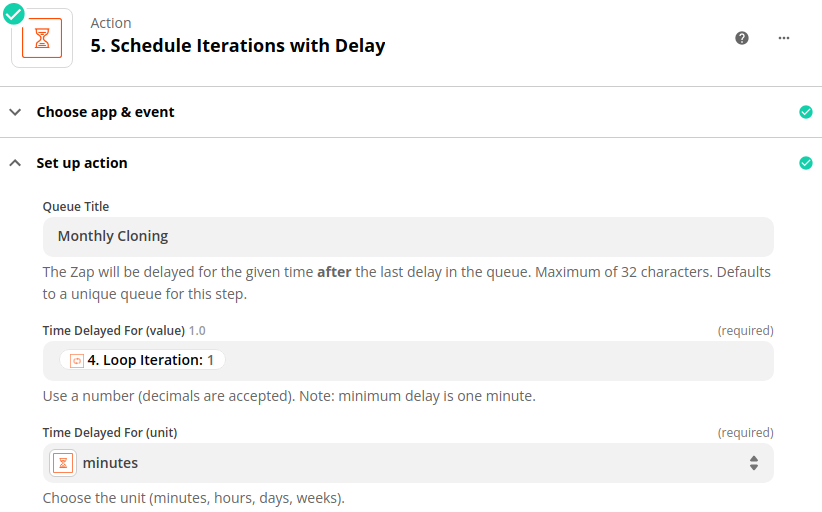

However, thanks to some helpful folks in the Zapier forum I found out that using the loop iteration number in the "Delay After Queue" action will mean that each successive iteration will be further delayed than the previous iteration i.e. the first iteration will be delayed for 1 minute before executing and the 2nd iteration will be delayed for 2 minutes before executing.

Therefore, we can get the iterations to execute sequentially as desired. It might take some experimentation to find the correct delay between iterations which is long enough so that one iteration is completely finished before the next one starts.
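The staggering trick amounts to multiplying the loop iteration number by a fixed delay. The sketch below shows the resulting schedule; the function name, the * delimiter default, and the one-minute step are assumptions to tune for your own zap.

```python
def iteration_delays(programs_text, minutes_per_iteration=1, delimiter="*"):
    """Return (iteration number, program name, delay in minutes) for each program."""
    programs = programs_text.split(delimiter)
    return [
        (number, name, number * minutes_per_iteration)
        for number, name in enumerate(programs, start=1)
    ]
```

If a single iteration takes longer than the step to finish, increase `minutes_per_iteration` until consecutive iterations no longer overlap.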

As shown in the video walkthrough all the remaining actions in this "Looping by Zapier" zap are identical to those in the webhook looping zap. The only difference is that there is no filter or send webhook action at the end of the zap because these are not needed to restart the loop since this is now all being handled by Zapier.

Cloning Follow-Ups

If you have not done so already I recommend checking out these 2 posts on UTM tracking and anonymous leads so that you can set attribution correctly:

Finally, if you want to see what other cool stuff you can automate with the API then take a look at the following posts

- Design Studio Upload Via API

- Email Process Automation Using the API & Zapier

- Merging Leads in Bulk using the API

The content in this blog has been reviewed by the Community Manager to ensure that it is following Marketing Nation Community guidelines. If you have concerns or questions, please reach out to @Jon_Chen or comment down below.


- Copyright © 2025 Adobe. All rights reserved.
