How do you track and grow your A/B testing strategy?

We do a ton of A/B testing, but with no long-term strategy beyond "let's just improve conversion rates." Our tracking is practically non-existent, and our ability to learn from our tests could be much better. How do you organize your results to make sure your A/B testing strategy keeps moving in a positive direction? Is it as simple as an Excel file? Should we be tracking with UTM parameters? Any tips and tricks you've implemented would be very helpful! Thank you.

Labels: Reports & Analytics

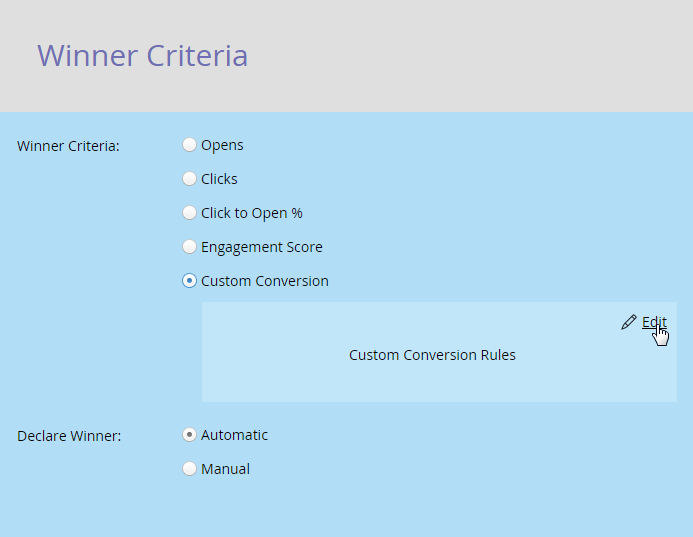

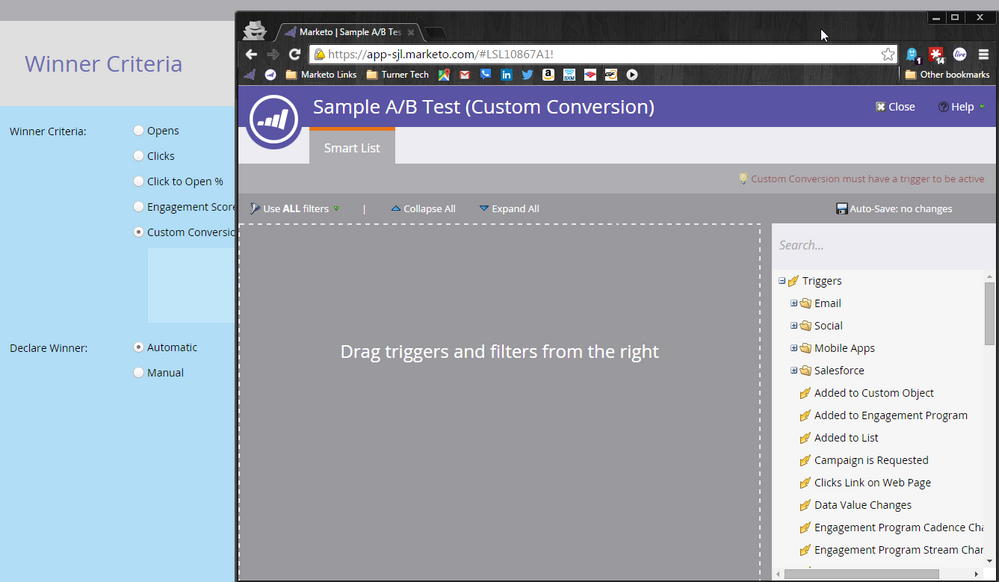

Hey Lauren - I'm a Marketo SC, and I was researching common reporting/analytics needs when I saw your question. What may help is to define more clearly what success means for this email, and then set up the Testing Results to listen for the behavior specific to that type of Campaign and how your leads react. You could use UTM parameters, or other data captured in hidden form fields, but the idea is to first get comfortable with our "Custom Conversion" setting for Winner Criteria. Have you seen this before?

This comes from asking yourself "How do I define success for my campaigns?" For example, what scenarios are you testing emails for? Are they Case Study/Whitepaper content promotions, where you are looking for downloads of that Whitepaper from the website? Or are they invites to events, in which case you want to look at how many Event Registrations/form fills each test email got you?

Many people get lost in "conversions", which becomes ambiguous given the variety of ways your Leads/Customers interact with each Channel you employ in your marketing strategy. Don't just track "conversion to lead" as the end point: between that initial email send and a "lead conversion" you could still be getting through to people without realizing it, because the metric you are after doesn't reflect what actually happened. I spoke to a customer recently who launched an Online Ad in popular Online Radio apps like Spotify and Pandora, and who believed they got a huge reaction to it because their concept of a "Conversion" was simply getting clicks on the ad. What they didn't realize until they used Marketo analytics was that at least 50% of the clicks were "bounces" from the site, meaning people clicked the ad and then immediately left, which implies accidental clicks or no interest. If you look merely at clicks, the campaign appears to be running great, but if you get down to "what really means success?" the picture is quite different.
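To make the clicks-versus-bounces point concrete, here is a minimal sketch of the arithmetic. The numbers are made up for illustration (they are not the customer's figures), and `engaged_click_rate` is a hypothetical helper, not a Marketo function.

```python
def engaged_click_rate(impressions: int, clicks: int, bounced_clicks: int) -> dict:
    """Compare the raw click rate with the rate after removing bounced visits."""
    engaged_clicks = clicks - bounced_clicks
    return {
        "raw_click_rate": clicks / impressions,
        "engaged_click_rate": engaged_clicks / impressions,
    }

# Hypothetical numbers: 10,000 impressions, 400 clicks, half of which bounced.
rates = engaged_click_rate(impressions=10_000, clicks=400, bounced_clicks=200)
print(rates)
```

With half the clicks bouncing, the rate that reflects real interest is half of what the raw click rate suggests.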

In the A/B test interface (see the screenshot), select Custom Conversion; you can then use Triggers/Filters to define more precisely "What activity do I listen for to tell me which email performed better?" For example, for an event, use the "Fills Out Form" trigger on the Registration Form for that event, and judge your tests by which ones bring about more form fills for the event.
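If you also want to go the UTM route, one possible approach is to tag each test email's registration link with its variant and then count form fills per variant from the page URL recorded with each fill. This is only a sketch using the Python standard library: the URL, the campaign name, the `utm_source`/`utm_medium` values, and the helpers `tag_url` and `form_fills_by_variant` are all assumptions for illustration, and it does not use any Marketo API.

```python
from collections import Counter
from urllib.parse import parse_qs, urlencode, urlparse

def tag_url(base_url: str, campaign: str, variant: str) -> str:
    """Append UTM parameters so each test email's link identifies its variant.

    Assumes base_url has no query string of its own.
    """
    params = {
        "utm_source": "newsletter",   # hypothetical value
        "utm_medium": "email",
        "utm_campaign": campaign,
        "utm_content": variant,       # variant label, e.g. "A" or "B"
    }
    return f"{base_url}?{urlencode(params)}"

def form_fills_by_variant(form_fill_urls: list[str]) -> Counter:
    """Count form fills per variant from the URL captured with each fill."""
    counts = Counter()
    for url in form_fill_urls:
        query = parse_qs(urlparse(url).query)
        variant = query.get("utm_content", ["unknown"])[0]
        counts[variant] += 1
    return counts

# Hypothetical registration page and campaign name.
base = "https://example.com/event-registration"
url_a = tag_url(base, "spring-event", "A")
url_b = tag_url(base, "spring-event", "B")

# Pretend these URLs were captured alongside four form fills.
fills = form_fills_by_variant([url_a, url_b, url_b, base])
print(fills.most_common())
```

The winner is then judged on form fills rather than clicks, which is the same idea as the Custom Conversion setting, just tallied outside the tool.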

There are tons of places on the web to learn how to do this better.

I would just set up an Excel sheet to monitor your results, with dates, names, and links to the emails or campaigns, and with columns such as:

- Sent

- Clicks

- Delivery %

- Name of Email

- Item tested

- Link to Email/Asset

- Test Code: A or B or C

- Sample Size or %

- Length of Test period

- Confidence % or Statistical Validity
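As a rough sketch of how the confidence column could be filled in, the snippet below runs a two-proportion z-test on clicks and writes one row per test to CSV, which Excel can open. Treating "Confidence %" as one minus the two-sided p-value is an assumption on my part (it is a common convention, not something Marketo prescribes), and the email name, link, and numbers are hypothetical.

```python
import csv
import io
import math

def confidence_rates_differ(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Confidence (0-1) that A and B convert at different rates.

    Two-proportion z-test with a pooled standard error; returns 1 - two-sided p-value.
    """
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    z = (conv_b / n_b - conv_a / n_a) / se
    # For a standard normal Z, P(|Z| <= |z|) = erf(|z| / sqrt(2)).
    return math.erf(abs(z) / math.sqrt(2))

# Hypothetical test: 5,000 sends per variant, 200 clicks for A, 260 for B.
confidence = confidence_rates_differ(conv_a=200, n_a=5000, conv_b=260, n_b=5000)

columns = ["Name of Email", "Item tested", "Link to Email/Asset", "Test Code",
           "Sent", "Clicks", "Length of Test period", "Confidence %"]
rows = [
    {"Name of Email": "Spring Event Invite", "Item tested": "Subject line",
     "Link to Email/Asset": "https://example.com/email-a", "Test Code": "A",
     "Sent": 5000, "Clicks": 200, "Length of Test period": "7 days",
     "Confidence %": round(confidence * 100, 1)},
    {"Name of Email": "Spring Event Invite", "Item tested": "Subject line",
     "Link to Email/Asset": "https://example.com/email-b", "Test Code": "B",
     "Sent": 5000, "Clicks": 260, "Length of Test period": "7 days",
     "Confidence %": round(confidence * 100, 1)},
]

# Write to an in-memory buffer here; swap in open("ab_tests.csv", "w", newline="") for a file.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=columns)
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

Keeping one row per variant makes it easy to filter by "Item tested" later and see which kinds of changes tend to win.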

Thank you, Josh!

- Copyright © 2025 Adobe. All rights reserved.
