- Marketing Nation

- :

- Products

- :

- Blogs

- :

- Product Blogs

- :

Quick-Start Guide to the Bulk Extract API

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ever made a big MOOPs where a field value was incorrectly changed to the wrong value and you want to change it back to the original? Well the Bulk Extract API, Postman, and Google Sheets can help you fix it!

Before we get started here take a look at the Quick Start Guide to the REST API to see how to set up your environment and variables in Postman and how to make your first GET & POST requests. DM me if you want to receive a copy of the Marketo API Postman Collection that I created, which contains 100+ pre-configured API requests to get you started 🙂

Also if you are looking for a course for learning the Marketo API then DM me 🙂

Have you ever wondered how business intelligence tools like Domo, PowerBI, and Tableau pull in the plethora of lead and activity data from your instance? You guessed it! The Bulk API and in particular the Bulk Extract API.

The Bulk API is a powerful means to import or export large amounts of data into or from your instance for leads, activities, custom objects, and program members. In this post, we will be focusing on the bulk extract API, however, if you are interested in the bulk import API then still tune in because the setup and job flow for the bulk import API are very similar and all the Postman steps below will still be very useful to you.

The Bulk extract API works with a queue system to extract information from an instance, where new jobs are added to the end of a queue and will be completed after the jobs already in the queue are completed i.e. first in - first completed.

Every Bulk Extract API flow can be broken down as follows:

- Create a job

- Put the job in the queue

- Query the job status periodically until the status is marked as complete

- Extract the job data

Bulk Activity Extract API Job Flow

We will be using the all-too-familiar example of needing to revert a field back to a previous value for a large number of leads because it was accidentally changed (I know we've all been there!). I'm sure if you have ever manually had to go through the activity logs to see what the previous value was for each lead in a list and manually update it, you will be bouncing up and down with excitement once you see how the bulk extract API can make this a breeze!

The TLDR summary of the scenario described in the YouTube video above is that the "Behavior Score - 7 Day History" field in our instance was incorrectly changed for a lot of people by the "Trim BS7DH" webhook.

Therefore we want to create a job to extract the activity data for the "Change Data Value" activity for the "Behavior Score - 7 Day History" field in the time window that we know the campaign ran.

Finding Activity IDs

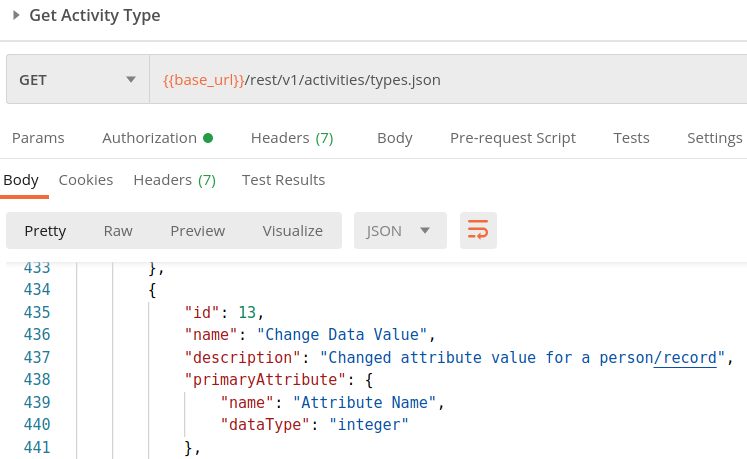

To find the activity id for the "Change Data Value" activity, which we will need when creating the job, we need to make a GET request to the Activity Types endpoint and search the response returned to Postman.

Finding Field IDs

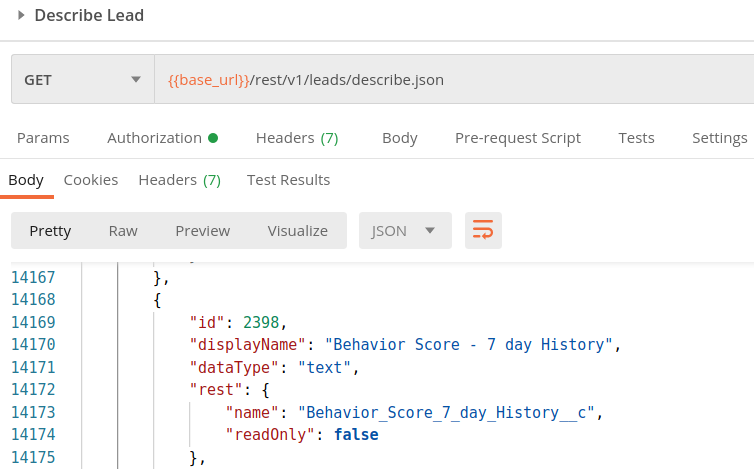

To find the field id for the "Behavior Score - 7 Day History" field, which we will need when creating the job, we need to make a GET request to the Describe Lead endpoint and search the response returned to Postman.

Creating a Job

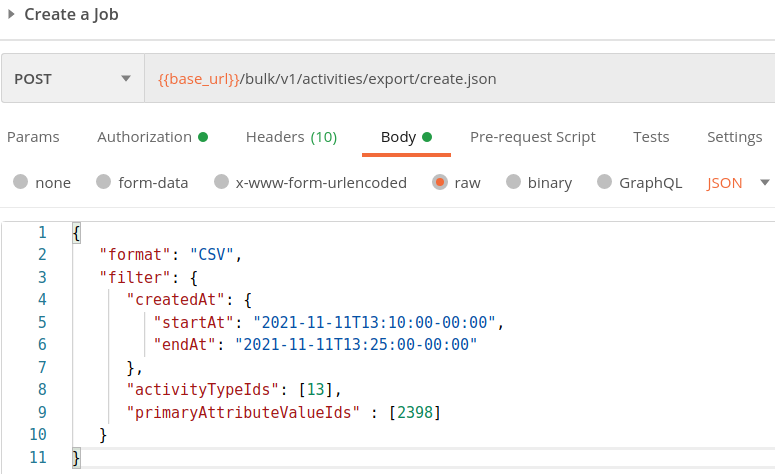

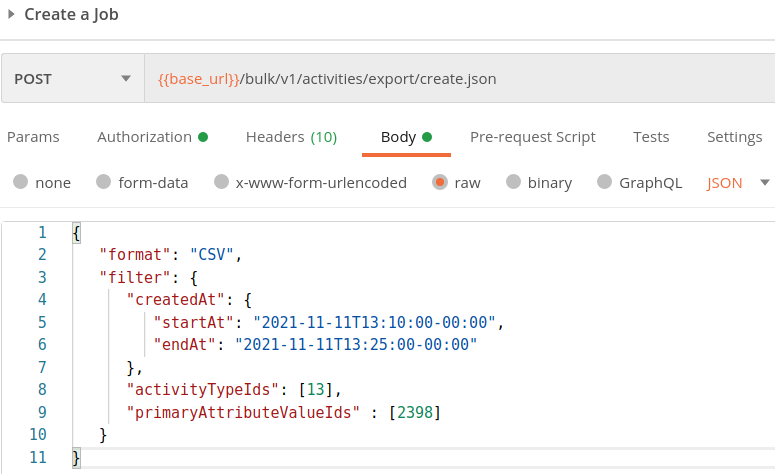

When creating a bulk activity extract job using the Create Job endpoint, the body of the request needs to contain the starting time point and ending time point for the time window you are interested in (the maximum span is 31 days, see the "Bulk API Limits" section below) along with the activity ids that you are interested in and if applicable you can filter even further by specifying field ids.

If a smart campaign was responsible for changing the field you want to revert to an original value then you can narrow down the time range using the "Results" tab of the smart campaign and using a view filter to only select the activity of interest (see the YouTube video above for a visual walkthrough of this).

N.B. The timestamps that you use for defining the time window must be in UTC time.

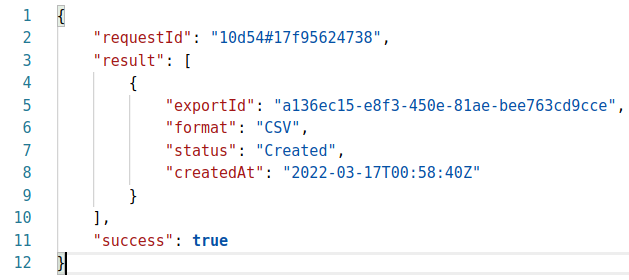

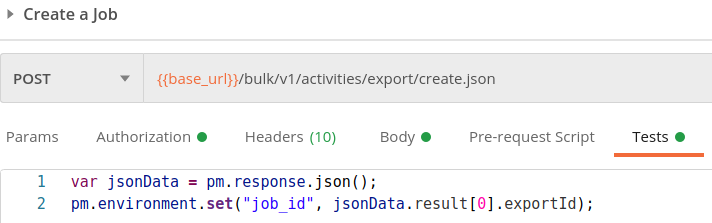

The response to this "create job" request then contains the job id in the "exportId" field. Since we will be using this job id in all subsequent bulk extract API requests we can create a new "job_id" variable in our Postman environment and automatically populate this variable by placing the code below in the "Tests" tab of the request.

var jsonData = pm.response.json();

pm.environment.set("job_id", jsonData.result[0].exportId); Code used to automatically populate the job_id variable

Code used to automatically populate the job_id variable

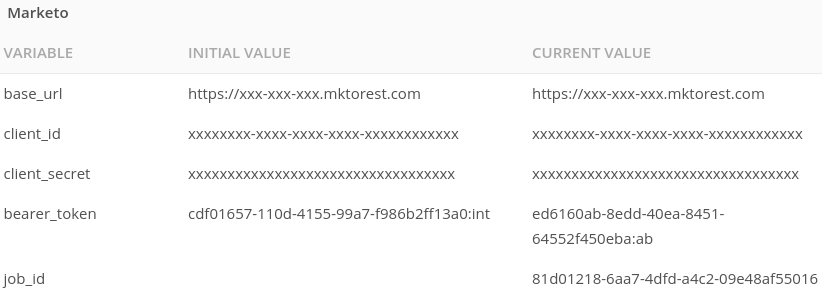

Postman environment containing the populated job_id variable

Postman environment containing the populated job_id variable

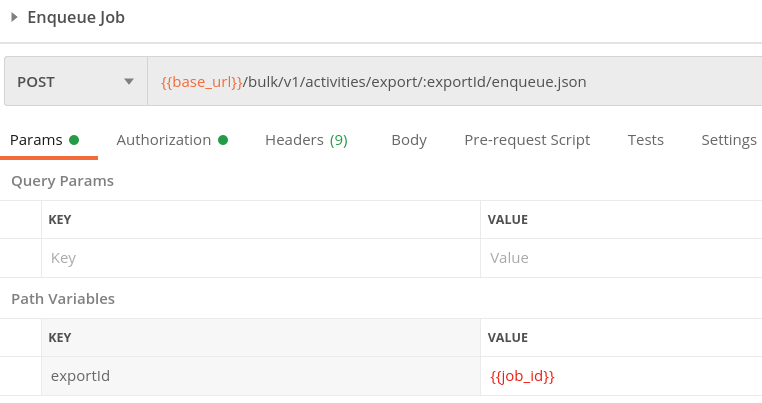

Queueing a Job

Once the job has been created the next step is to put the job in the queue (see job and queue limits in the "Bulk API Limits" section below) using the Enqueue Job endpoint, which uses the job_id environment variable we populated using the test code above.

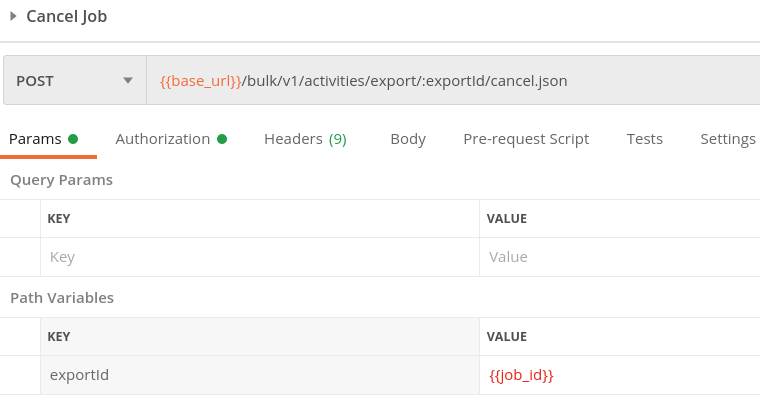

Canceling a Job

If for some reason you made a mistake and need to cancel a job then you can use the Cancel Job endpoint to do so.

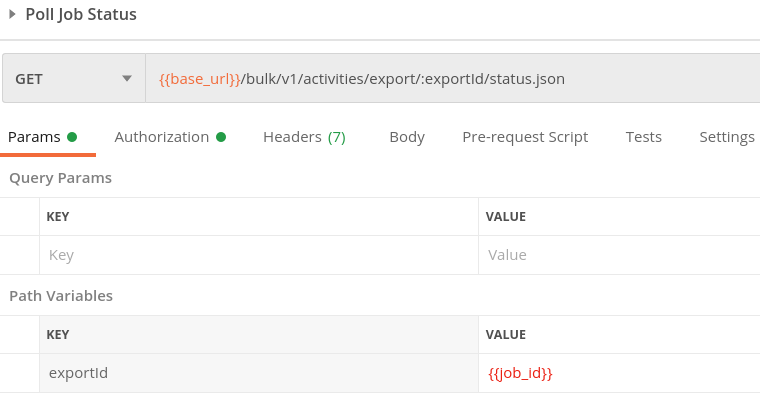

Querying Job Status

Next, you need to intermittently query the status of the job until the "status" field in the response says "completed". Notice that the returned response also includes the file size in bytes of the data contained within the job.

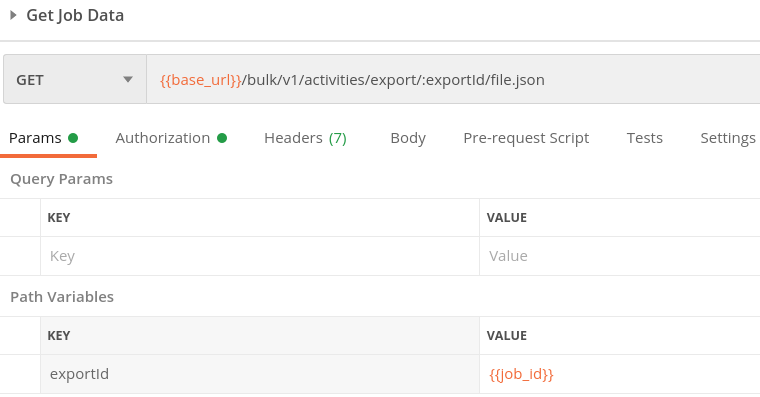

Extracting Job Data

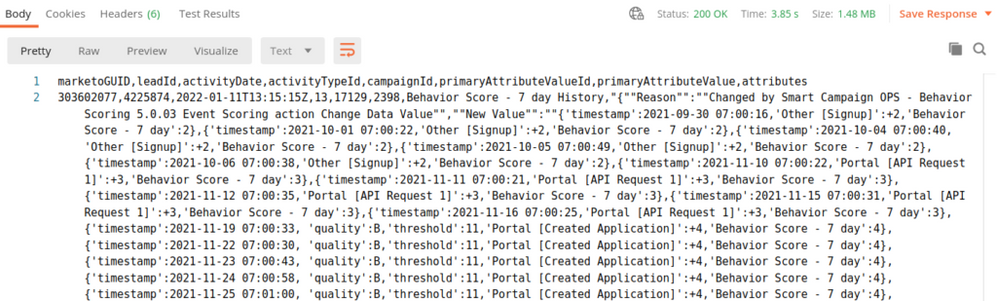

Finally, you are ready to extract the job data and save the response to a CSV file that can be imported into Google Sheets so that the previous values for the field of interest can be extracted using Google Sheets formulas.

Extracting Previous Values Using Google Sheets

When you import the job data into Google Sheets you will end up with a tab similar to the Bulk Extract Data tab in the "Bulk API Extract Change Data Value" workbook.

Next, the "New Value" and "Old Value" values can be obtained by parsing the "attributes" column using the regexextract function as shown below.

New Value = REGEXEXTRACT(attributes_value,"""New Value"":""(.*)"",""Old Value""")

Old Value = REGEXEXTRACT(attributes_value,"""Old Value"":""(.*)"",""Source")

Then in the example that I mention in the YouTube video, I wanted to identify all the people who had their "Behavior Score - 7 Day History" field incorrectly truncated by getting the length of the "Old Value" rows and filtering on those less than 5000 characters in length.

Once the affected people had been identified, the vlookup function was then used to pull in their email address because when importing lists into your instance you cannot use the ID as the identifier, you need to use the email address instead.

As the "attributes" column shows, the "Reason" for the change data value action, in this case, was marked as "Webhook Updated Lead : Trim BS7DH" so we can create a Smart List using this reason and the activity date to obtain a 2 column list of people affected, containing each person's ID and Email Address. We can then import this information into the ID-Email Mapping tab and use them in our vlookup formula.

Then we can copy the "Email Address" and "Old Value" columns for the filtered rows into the Export tab, export this list, and then finally import this list to revert these people's "Behavior Score - 7 Day History" fields back to their original value.

What's Next?

Now that you are well warmed up with using the bulk extract API take a look at how you can use the API to automate and streamline different workflows:

- Bulk Merge Duplicates according to your own custom rules

- Streamline your email creation process using Google Sheets templates and Zapier

- Automate your UTM creation and tracking using Google Forms, Google Sheets, and Zapier

Bulk API Limits

Bulk Extract

- You are only allowed to have 10 jobs in the queue at once. Note that this queue is shared between the leads, activities, custom objects, and program member bulk APIs.

- Only 2 jobs can be in the "Processing" state at any one time

- The total amount of data that you can export is limited to 500MB per day unless you have purchased a higher data limit. This 500MB limit resets daily at 12:00AM CST.

- The data extracted from a job will only be retained for 10 days

- When using the "createdAt" or "updatedAt" values to define a time window for your request the maximum width of this interval is 31 days

Bulk Import

- You are only allowed to have 10 jobs in the queue at once

- Only 2 jobs can be in the "Processing" state at any one time

- You are limited to importing a maximum file size of 10MB

Bulk API FAQs

Do I need special authentication to access the Bulk API?

No, you can use the same authentication that you normally use for the API to get an access token to use in subsequent Bulk API requests. You can then store this access token in your environment as a variable in Postman to make it easy to use in all other requests.

It is worth noting that only the API user who created the job can make requests related to that job e.g. querying status, retrieving data, etc.

How big are the files from the job?

The size of the job data will depend on the parameters you specify when creating the job e.g. the time frame or the number of activities or leads being exported. Once the "Query Job Status" request is complete it will show you the file size of the job in bytes so you then know before extracting the data how big the file will be.

Is there a maximum file size for job size?

No, there is no limit to the size of the job created when using the bulk extract API. The only constraint is that you are restricted to exporting a maximum of 500MB per day unless you purchased an additional quota. When using the bulk import API you are limited to importing 10MB at a time.

How can I tell how much of my daily 500MB extraction quota is left?

In order to tell how much of your bulk extract API quota you have used, you will need to make 4 requests to each of the Lead, Activity, Custom Object, and Program Member endpoints below to get a list of all jobs created within the last 7 days. Each of these jobs returned will contain a "filesize" attribute in bytes.

Start by filtering on jobs that have a "Completed" status and a "finishedAt" value with today's date, remembering that these "finishedAt" values are in UTC time, and then sum all of these values for the current day to see how much of your 500MB quota is left.

- Get Lead Jobs within the past 7 days

- Get Activity Jobs within the past 7 days

- Get Program Member Jobs within the past 7 days

- Get Custom Object Jobs within the past 7 days

Can the Bulk API pull data from all of the workspaces in my instance?

Yes, the Bulk API pulls data from all of your workspaces and it is not possible to limit the scope of your request to a particular workspace.

The content in this blog has been reviewed by the Community Manager to ensure that it is following Marketing Nation Community guidelines. If you have concerns or questions, please reach out to @Jon_Chen or comment down below.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- Copyright © 2025 Adobe. All rights reserved.

- Privacy

- Community Guidelines

- Terms of use

- Do not sell my personal information

Adchoices