Reversing a data mangling disaster with Get Lead Changes + a regex trick


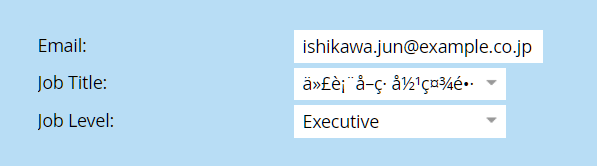

A classic data disaster. If you don’t make a study of encoding, it’ll make a study of you!

It was all fun and games until this:

Turned into this:

Here’s the old value translated into English, if you’re curious:

The new value, of course, is gibberish. It's a specific type of gibberish, to be sure (UTF-8 mistakenly decoded as ISO-8859-1 and then encoded to UTF-16, we think), but it can’t be repaired in place. What happened here: list imports were being done on behalf of the Japanese satellite office, but not by the people in that office, who understand the language. So nobody noticed that those imports were persistently using broken encoding.
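
To see why the mangled value ends up with no Japanese characters at all, here's a minimal sketch of only the first step we suspect: UTF-8 bytes read back as ISO-8859-1. The function name is mine, it assumes a runtime that provides `TextEncoder` (Node.js or a browser), and it doesn't try to reproduce whatever happened to the data afterward.

```javascript
// Simulate reading UTF-8 bytes as if they were ISO-8859-1.
// misdecodeUtf8AsLatin1 is a hypothetical name used for illustration only.
function misdecodeUtf8AsLatin1(text) {
  var bytes = new TextEncoder().encode(text); // the UTF-8 bytes of the input
  var out = '';
  for (var i = 0; i < bytes.length; i++) {
    // ISO-8859-1 maps every byte 0x00-0xFF to the code point with the same number.
    out += String.fromCharCode(bytes[i]);
  }
  return out;
}

var original = '日本';
var mangled = misdecodeUtf8AsLatin1(original);
console.log(original.length, mangled.length); // 2 6
```

Each Japanese character here takes three bytes in UTF-8, and every one of those bytes becomes its own Latin-1 character, so nothing in the result falls in a Japanese range.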

Luckily, the Marketo REST API offers the Get Lead Changes endpoint, which returns the old and new values for every historical change to Person fields. Unless you’re regularly downloading your database and storing a daily delta yourself (and very few users are doing that!), it’s the only way to reverse unwanted changes.

Ah, but it’s not that easy. While it turned out there were only about 50,000 bad Data Value Changes activities like this, they stretched back 8 years!

We were able to narrow down the Get Lead Changes results to people whose current Country is Japan by creating a static List of those people and passing its ID as the `listId` parameter in the request.
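
Here's a rough sketch of what that request and its result handling might look like. The helper names are hypothetical, and the endpoint path, the `nextPageToken`, `fields` and `listId` parameters, and the response shape (`result[].fields[]` with `name`, `oldValue`, `newValue`) reflect my reading of the Marketo REST docs, so check them against your instance. Authentication and fetching the initial paging token are left out.

```javascript
// Build the Get Lead Changes URL for one page of results.
// baseUrl is your instance's REST base, e.g. a placeholder like https://example.mktorest.com
function buildLeadChangesUrl(baseUrl, nextPageToken, fieldNames, listId) {
  var params = {
    nextPageToken: nextPageToken,   // obtained beforehand from the paging token endpoint
    fields: fieldNames.join(','),   // the Person fields whose changes you want
    listId: listId                  // static List of people whose Country is Japan
  };
  var query = Object.keys(params).map(function (key) {
    return encodeURIComponent(key) + '=' + encodeURIComponent(params[key]);
  }).join('&');
  return baseUrl + '/rest/v1/activities/leadchanges.json?' + query;
}

// Flatten one response page into one row per changed field,
// which is a convenient shape for pasting into a spreadsheet.
function flattenLeadChanges(page) {
  var rows = [];
  (page.result || []).forEach(function (activity) {
    (activity.fields || []).forEach(function (field) {
      rows.push({
        leadId: activity.leadId,
        activityDate: activity.activityDate,
        field: field.name,
        oldValue: field.oldValue,
        newValue: field.newValue
      });
    });
  });
  return rows;
}
```

In practice you'd keep requesting pages with each response's new paging token until the API reports there are no more results, appending the flattened rows as you go.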

But that still left us with hundreds of thousands of legit changes, which we definitely did not want to undo, interspersed with the 50K bad ones.

How can you pick out only the bad changes? That’s where Unicode-aware regular expressions come in. We want the changes where the old value had at least one Japanese character, but the new value had none.

OK, full disclosure: the software I actually used for this project was the AbleBits extension for Excel. It’s great, it works, and IMO if you face a disaster like this one even once in your life, it’s well worth the 69 bucks.[1]

But in the interest of not trapping you into a commercial product, I decided to figure out how you’d do it in Google Sheets + Apps Script.

“Japanese character” in practice means characters from the Hiragana, Katakana, and Han Unicode scripts. Han characters are also used in Chinese, Korean and Vietnamese, so the mere presence of a Han glyph doesn’t signal Japanese, but Han plus the person’s Country being Japan is a very reliable indicator.

So, using the helpful guide from Localizing Japan, changing the provided \xNNNN format to JavaScript’s \uNNNN, we create the following Sheets custom function:

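    /**
     * Returns TRUE for each cell that contains at least one Hiragana, Katakana, or Han character.
     * @customfunction
     */
    function HAS_JAPANESE(cells) {
      // A single cell arrives as a scalar; a range arrives as an array of rows.
      // Each row is coerced to a string by test(), so pass a single-column range.
      cells = Array.isArray(cells) ? cells : [cells];
      return cells.map(function(cell){
        // Hiragana | Katakana | Han
        return /[\u3041-\u3096]|[\u30A0-\u30FF]|[\u3400-\u4DB5\u4E00-\u9FCB\uF900-\uFA6A]/.test(cell);
      });
    }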
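
If your JavaScript engine supports Unicode property escapes (the V8 runtime should, though I haven't confirmed this for every Apps Script setup), a variant can name the scripts directly instead of spelling out codepoint ranges. This is an alternative sketch, not what I used, and `hasJapaneseScript` is a made-up name. Note the two approaches don't cover exactly the same characters: for example, the prolonged sound mark U+30FC sits inside the Katakana range above, but its Unicode script is Common.

```javascript
// Match any character whose Unicode script is Hiragana, Katakana, or Han.
// The "u" flag is required for \p{...} property escapes.
var JAPANESE_SCRIPTS = /[\p{Script=Hiragana}\p{Script=Katakana}\p{Script=Han}]/u;

// Hypothetical helper: true if the value contains at least one such character.
function hasJapaneseScript(value) {
  return JAPANESE_SCRIPTS.test(String(value));
}

console.log(hasJapaneseScript('東京都'));  // true
console.log(hasJapaneseScript('Tokyo'));   // false
```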

`HAS_JAPANESE` populates 2 columns, one for the old value and one for the new value, which we use for further filtering:

Not exactly smooth sailing, but it’s good to know that rather than just eyeballing which rows are the bad ones, a machine can do that for you.
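
If you'd rather do the final filtering in code than with spreadsheet filters, the rule (old value has Japanese, new value has none) can be sketched like this. The row shape and the function name are assumptions for illustration; the ranges are the same ones used in the custom function. Keep in mind that a legitimate edit from a Japanese value to a romanized one would be flagged too, so the output is a review list, not an automatic undo list.

```javascript
// Same codepoint ranges as the Sheets custom function: Hiragana, Katakana, Han.
var JAPANESE = /[\u3041-\u3096\u30A0-\u30FF\u3400-\u4DB5\u4E00-\u9FCB\uF900-\uFA6A]/;

// rows: hypothetical objects shaped like { leadId, field, oldValue, newValue }.
// Keeps only the changes where Japanese text was replaced by text with no Japanese.
function findSuspectChanges(rows) {
  return rows.filter(function (row) {
    var oldHasJapanese = JAPANESE.test(String(row.oldValue || ''));
    var newHasJapanese = JAPANESE.test(String(row.newValue || ''));
    return oldHasJapanese && !newHasJapanese;
  });
}

var sample = [
  { leadId: 1, field: 'company', oldValue: '株式会社サンプル', newValue: 'æ ªå¼ä¼šç¤¾' },
  { leadId: 2, field: 'company', oldValue: 'Sample KK', newValue: 'Sample Inc.' },
  { leadId: 3, field: 'city', oldValue: '大阪', newValue: '東京' }
];

// Only lead 1 is flagged: lead 2 never had Japanese, lead 3 still has it.
console.log(findSuspectChanges(sample).map(function (row) { return row.leadId; }));
```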

NOTES

[1] Note AbleBits supports Unicode script shorthand for IsKatakana and IsHiragana but doesn’t currently support IsHan, so Han has to be spelled out using codepoint ranges.


![image[1].png image[1].png](https://nation.marketo.com/t5/image/serverpage/image-id/35089i1DFA41AFB282DC8C/image-size/large?v=v2&px=999)

![image-1[1].png image-1[1].png](https://nation.marketo.com/t5/image/serverpage/image-id/35090iEE7D2D6810ED0108/image-size/large?v=v2&px=999)

![2022-06-06-18_06_59---------translate---Google-Search[1].png 2022-06-06-18_06_59---------translate---Google-Search[1].png](https://nation.marketo.com/t5/image/serverpage/image-id/35092i84C863E54287036D/image-size/large?v=v2&px=999)

![image-2[1].png image-2[1].png](https://nation.marketo.com/t5/image/serverpage/image-id/35093iB120248F46993518/image-size/large?v=v2&px=999)

![2022-06-17-13_53_00-E__Temporary-Screenshots_2022-06-17-13_52_43-getLeadChanges_raw.xlsx---Excel.png[1].png 2022-06-17-13_53_00-E__Temporary-Screenshots_2022-06-17-13_52_43-getLeadChanges_raw.xlsx---Excel.png[1].png](https://nation.marketo.com/t5/image/serverpage/image-id/35094iDA18F3B1EE947F89/image-size/large?v=v2&px=999)