Re: Spam filters registering clicks?



Labels: Lead Management


There are several posts here on Marketo about this issue, and my firm has been digging into it a lot over the last few days. The short answer is yes, this does happen: spam filters (like Barracuda), bots, and junk-mail algorithms do click on links in emails (see this interesting blog post from 2013 regarding the issue; Barracuda calls this "multilevel intent analysis"). The spam filter is checking the link for malicious redirects, malware, and the like. There isn't a whole ton that we marketers can do about it, though. Here is what we've done and found:

- First, we downloaded the entire Marketo activity log using the API, put it in a database, and started dissecting the "Click Email" activity types. We also sat down with our system administrator to review some of this data. In short: there is nothing in the User Agent, Platform, Device, etc. that helps spot these.

- Then we started looking at timing: what about people who click before they open? What about people who click very quickly after the "Send Email" activity is logged? Well... the "Send Email" activity doesn't tell you exactly when the email leaves Marketo's servers, so it isn't an accurate reference point, and you can't reliably spot bots based on it.

- The best method we've found so far is to include a one-pixel image link in the email, invisible to just about everyone (as suggested here). Anything that clicks on such a tiny pixel you can consider a bot. True, someone who doesn't load images might see a placeholder box, but most people won't see it at all.

- Another possibility: look for a bunch of clicks that all happen at the same time (or for people clicking every link in an email, every week; would a real person really need to read your Privacy Policy week in and week out?). Those are probably bots... but I personally would want to download the data into a real database before attempting this kind of query.

- One more (really complex) possibility: when we went to our sysadmin (the guy who runs our own company's Barracuda machine) about a lot of these issues, he started to "ping" some of the IP addresses included in the suspicious "Click Link in Email" activities. One or more of them responded in a way that identified it as a Barracuda box. If you are really, super-duper concerned about this problem, it should be possible to download all Marketo activities via the API, write a custom script to extract the IP addresses from the "Click Email" activities, and then periodically ping all those servers to see whether they self-identify as a spam filter (parsing the text of the responses for incriminating evidence).
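The trap-link and same-time-burst checks described above lend themselves to plain SQL once the activity log sits in a database. Below is a minimal sketch using Python's built-in sqlite3. It assumes the activities have already been exported from the API and flattened into (lead_id, activity_type, activity_ts, link) rows. The table layout, the "Click Email" label, the trap URL, and the three-link threshold are all hypothetical choices for illustration, not Marketo's own schema.

```python
import sqlite3

# Hypothetical trap URL: the invisible one-pixel link embedded in the email.
TRAP_URL = "https://www.example.com/pixel-trap"


def load_activities(rows):
    """Load (lead_id, activity_type, activity_ts, link) tuples into an in-memory DB.

    The table layout is an assumption; activity_ts is an ISO-8601 string.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE activity ("
        " lead_id INTEGER, activity_type TEXT, activity_ts TEXT, link TEXT)"
    )
    conn.executemany("INSERT INTO activity VALUES (?, ?, ?, ?)", rows)
    return conn


def trap_clickers(conn, trap_url=TRAP_URL):
    """Leads that clicked the one-pixel link, which a human should almost never see."""
    cur = conn.execute(
        "SELECT DISTINCT lead_id FROM activity"
        " WHERE activity_type = 'Click Email' AND link = ?"
        " ORDER BY lead_id",
        (trap_url,),
    )
    return [row[0] for row in cur]


def burst_clickers(conn, min_links=3):
    """Leads that clicked at least `min_links` distinct links at the same timestamp."""
    cur = conn.execute(
        "SELECT DISTINCT lead_id FROM ("
        "  SELECT lead_id, activity_ts, COUNT(DISTINCT link) AS n"
        "  FROM activity WHERE activity_type = 'Click Email'"
        "  GROUP BY lead_id, activity_ts"
        ") AS per_timestamp WHERE n >= ? ORDER BY lead_id",
        (min_links,),
    )
    return [row[0] for row in cur]
```

Grouping on the exact timestamp only works if the export keeps second-level precision. A real query would probably want a small tolerance window instead, and the threshold is a judgment call.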

We have not done this last thing, because our one-pixel solution has indicated (at least over the last two weeks) that this is likely not a major issue. Perhaps some day, when our organization has unlimited resources (heh), we will pursue it, but the reality is that we have a lot going on and better ways to add value to our marketing efforts.

I would also like the data to exist in a perfect world - one where our Users validate our TRON Data Discs and we can take down the evil Master Control Programs while we're on our light-cycles on the grid - but that gleaming world of perfect, neon data does not exist. For most of us, I would guess this statistical aberration will not significantly affect our analysis of content effectiveness.

Hope this helps.


Venus - we (meaning Becky Miner, our Marketo Queen, and myself - a humble court jester) have found that, many times, the suspected "bot" clicks are checking the first link in the entire email. So we've put our one-pixel link at the very front of the email, before any other links...we've caught a few flies in the trap so far.
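If scanners tend to test the first link, it may be worth confirming before each send that the trap link really is the first link in the rendered HTML. Below is a minimal sketch with Python's standard html.parser. The trap URL and the pixel markup are hypothetical placeholders.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def trap_is_first_link(email_html, trap_url):
    """True if the first <a href> in the email is the (hypothetical) trap link."""
    collector = LinkCollector()
    collector.feed(email_html)
    collector.close()
    return bool(collector.hrefs) and collector.hrefs[0] == trap_url
```

For example, an email that opens with `<a href="https://www.example.com/pixel-trap"><img src="https://www.example.com/1x1.gif" width="1" height="1" alt=""></a>` before any other link passes the check.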


I am noticing that none of these links leads to a "visit page" activity (and they should).

As mentioned in the other thread, you shouldn't expect a Visited Web Page (VWP) activity just because you saw the Clicked Email activity. The anti-spam technology in use isn't going to download all of the page's libraries and run the JavaScript logging (in fact, it would be a pretty good DoS attack against such services if it did).


That is an important distinction to point out. I'm only looking at clicks that should result in a web page visit (i.e., a clicked link containing armor.com should lead to a web page visit containing armor.com). I checked the activity of a "normal" lead, and the email click does lead to a web page visit. At this point, I'm just trying to prevent these leads from scoring up from bot clicks.


Hi Venus. How did you actually set up your click scoring in Marketo? I was looking to add a filter where "web activity" is not empty.


Well, posted too soon. I found "Visited Web Page".


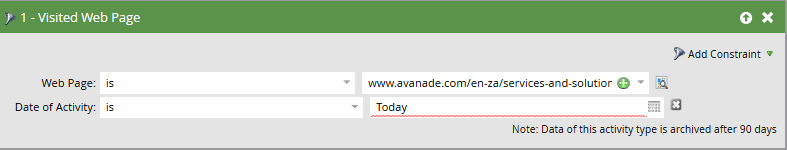

Hi Amanda, sorry I just saw this. It sounds like you found the solution. I have mine set up as follows:

Trigger: Visited Web Page contains "abc.com"

Filter: Clicked Link in Email contains "abc.com" in the last 5 minutes

I've done a few spot checks, and the flow appears to be working properly.
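To reason about which leads a trigger-plus-filter pairing like this admits, it can help to model it in a few lines. Below is a rough Python sketch. It assumes each lead's email clicks are available as (timestamp, URL) pairs. "abc.com" and the 5-minute window come from the post, while the function name and data shape are hypothetical. It approximates "contains" with a substring check and does not reflect how Marketo evaluates filters internally.

```python
from datetime import datetime, timedelta


def visit_qualifies(visit_time, visit_url, clicks, domain="abc.com",
                    window=timedelta(minutes=5)):
    """Approximate: Visited Web Page contains `domain` (the trigger)
    AND Clicked Link in Email contains `domain` within the last `window` (the filter).

    `clicks` is a hypothetical list of (click_time, clicked_url) for the same lead.
    """
    if domain not in visit_url:
        return False
    # The click must fall inside [visit_time - window, visit_time].
    return any(
        domain in url and visit_time - window <= t <= visit_time
        for t, url in clicks
    )


click = (datetime(2024, 3, 4, 10, 0), "https://abc.com/offer")
print(visit_qualifies(datetime(2024, 3, 4, 10, 3), "https://abc.com/offer", [click]))  # True
```

A scanner click with no later page visit never fires the trigger, which is the point of the setup. By the same logic, a real reader whose visit is logged more than 5 minutes after the click also drops out.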


I stumbled on a slightly different version of this solution last week: a click trigger, then a Wait step and a Remove from Flow step based on whether the lead visited the page.

The only problem, though, is that today I've been looking through the leads that were removed from the flow, and some look like legitimate users - they clicked on the link several minutes after the email delivered, and registered an open, but never visited the web page.

I don't think the Munchkin tracking code works 100% of the time. It would be best to simply not count clicks that happen either before delivery or within seconds of delivery.
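The rule proposed here (ignore clicks logged before delivery or within a few seconds of it) is easy to express, although it depends entirely on the chosen threshold. Below is a minimal Python sketch. The 10-second `min_gap` is a hypothetical value, and any fixed cutoff will also discard a real reader who happens to click very quickly.

```python
from datetime import datetime, timedelta


def countable_clicks(delivered_at, clicks, min_gap=timedelta(seconds=10)):
    """Keep only clicks logged at least `min_gap` after delivery.

    Clicks before delivery (negative gap) or within `min_gap` of it are
    treated as probable scanner clicks. `min_gap` is a hypothetical threshold.
    """
    return [t for t in clicks if t - delivered_at >= min_gap]


delivered = datetime(2024, 3, 4, 10, 0, 0)
clicks = [
    datetime(2024, 3, 4, 9, 59, 59),  # logged before delivery: dropped
    datetime(2024, 3, 4, 10, 0, 3),   # 3 seconds after delivery: dropped
    datetime(2024, 3, 4, 10, 7, 0),   # 7 minutes after delivery: kept
]
print(countable_clicks(delivered, clicks))
```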

Edit: Just set up an idea for this, if anyone wants to up-vote it:


The solution is as I described above and elsewhere. Anything that involves assumptions like "no one ever opens emails 30 seconds after they get them" isn't gonna work.


I've found a flaw in my Smart List. It's not capturing all leads who clicked and then visited a web page. I've spot checked several leads (half that triggered correctly and half that didn't) and cannot find any difference between the two sets...

I think I will have to rely solely on the Visited Web Page trigger and another filter like Member of a Program or Was Delivered Email.

It's frustrating that the Click Link in Email activity is becoming so unreliable as an indicator of intent.


Hi Venus,

I set my smart list up in the opposite manner and it so far has seemed to catch everyone I've spot checked:

Trigger: Clicks link in email is "abc.com"

Visited webpage is "abc.com" in timeframe Today


Since clicking "Today" in the calendar pop-up inserts today's date, will this date adjust dynamically each day? And what about time zones? Let's say someone in China clicks a link in an email that was received a day earlier (in our Marketo instance's time zone). Will this adjust for the lead's time zone?


Hi Dan,

The timeframe option is dynamic: you don't actually pick a specific date from a calendar pop-up. Instead, it's literally the word "Today", which acts like a token that pulls in today's date. You bring up a very good point about time zones, but it is based on the time the activity was logged in your Marketo instance.
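To see why the lead's local time doesn't enter into it, convert a click timestamp into the instance's time zone. This assumes, as the reply says, that "Today" is judged by when the activity was logged in the instance. Below is a small Python sketch using fixed UTC offsets. The instance zone (UTC-6) and the example click time are hypothetical, and real zones also shift for daylight saving time.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets keep the sketch self-contained; real zones observe DST.
INSTANCE_TZ = timezone(timedelta(hours=-6), "instance")  # hypothetical instance zone
BEIJING_TZ = timezone(timedelta(hours=8), "Beijing")


def activity_date_in_instance(ts):
    """Calendar date of a timezone-aware timestamp as seen in the instance zone."""
    return ts.astimezone(INSTANCE_TZ).date()


# 9:00 AM on March 5 in Beijing is 7:00 PM on March 4 at UTC-6.
click = datetime(2024, 3, 5, 9, 0, tzinfo=BEIJING_TZ)
print(activity_date_in_instance(click))  # 2024-03-04
```

So a click made on the lead's "tomorrow" can still land in the instance's "Today", and the reverse can happen too.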


That's what I thought, Conor. But when I entered "Today" in the field, Marketo indicated it was invalid with a red squiggly:


Hi Dan,

Change "is" to "in time frame" and you will have the option to select today.


Ah great - thanks for catching this Conor!


Conor Fitzpatrick (and others who have participated in this thread), we had to remove this filter from our campaigns. We found that in many instances Marketo wasn't logging the "Visited Web Page" activity until 5 minutes or more after the click link activity, so the trigger campaigns weren't firing with this filter in place. We thought we had this one solved.
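Before settling on any time window, it may be worth measuring how long the Visited Web Page activity actually takes to show up after a click in your own data. Below is a rough Python sketch that assumes per-lead click and visit timestamps have already been exported. The function names are hypothetical. Pairing each click with the first visit at or after it only approximates which visit belongs to which click.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def click_to_visit_lags(clicks, visits):
    """For each click, the delay until the first visit logged at or after it.

    `clicks` and `visits` are lists of datetimes for one lead. A click with
    no later visit gets None.
    """
    visits = sorted(visits)
    lags = []
    for c in sorted(clicks):
        i = bisect_left(visits, c)  # index of the first visit >= click time
        lags.append(visits[i] - c if i < len(visits) else None)
    return lags


def share_over(lags, limit=timedelta(minutes=5)):
    """Fraction of clicks whose visit came later than `limit` or never came."""
    if not lags:
        return 0.0
    over = sum(1 for lag in lags if lag is None or lag > limit)
    return over / len(lags)
```

Counting "no visit at all" as over the limit matches how a "last 5 minutes" filter would treat those clicks. A high share here suggests the window, rather than bots, is what's excluding leads.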


Well, that's a huge bummer. I wonder if it has to do with the Munchkin 2.0 updates? I haven't tested it post-release, but I will now to see if we get a different result.


The thing is, Munchkin v2 hasn't been rolled out to anyone yet. There was a minor change made today, but I doubt that's affecting this.


Hmmm... Do you think it has anything to do with your visit volume? Our web traffic volume is quite low right now as we're in the process of growing our content strategy.


Thanks, Conor. I can't believe I didn't think to try that!


Remember, if you're going to use this method, every link in the email has to be one that is expected to leave a Visited Web Page activity. Direct PDF links, for example, do not.
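Before relying on a Visited Web Page condition, you could audit an email's links for ones that won't leave that trail. Below is a minimal Python sketch. The set of Munchkin-tracked domains and the list of direct-file extensions are assumptions. The check also presumes that every page on those domains actually loads the tracking script.

```python
from urllib.parse import urlparse

# Hypothetical: domains whose pages load the Munchkin tracking script.
MUNCHKIN_DOMAINS = {"www.example.com", "go.example.com"}
# Direct file downloads have no page on which to run the tracking script.
NON_PAGE_EXTENSIONS = (".pdf", ".doc", ".docx", ".xls", ".xlsx", ".zip")


def expects_visit_activity(url):
    """True if clicking `url` should plausibly leave a Visited Web Page activity."""
    parsed = urlparse(url)
    # urlparse lowercases the hostname, so the set lookup is case-insensitive.
    if parsed.hostname not in MUNCHKIN_DOMAINS:
        return False
    return not parsed.path.lower().endswith(NON_PAGE_EXTENSIONS)
```

Any link that fails this check would need separate handling, or a campaign that doesn't depend on a follow-up page visit.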

- Copyright © 2025 Adobe. All rights reserved.
